Soft ABC

A first ABC algorithm

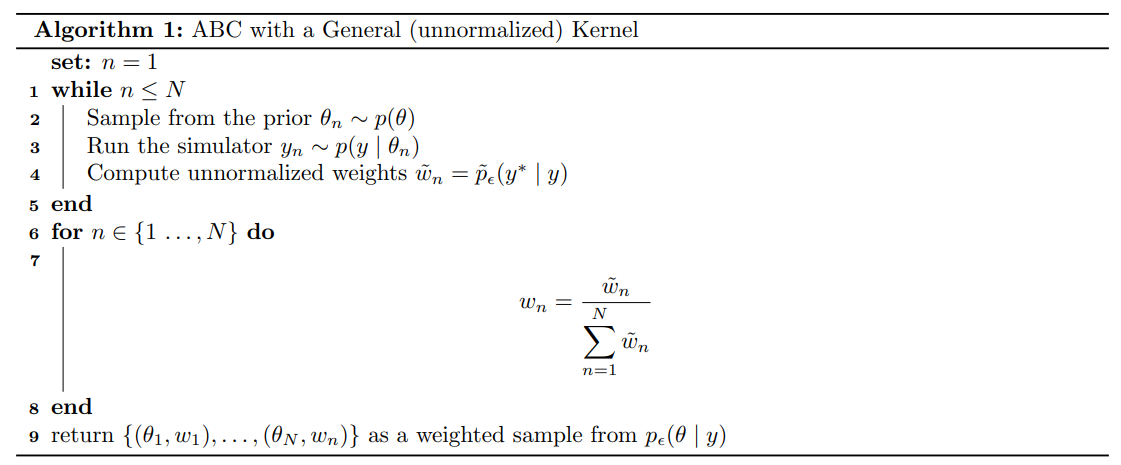

The algorithm that I hinted at in the earlier sections can be summarized as follows: at each iteration we first sample a parameter value from the prior, then run the simulator with that parameter value, and finally feed the simulator output into the kernel, which returns a weight representing the similarity between the simulated data and the observed data.

This algorithm defines a posterior approximation as a mixture of Dirac deltas located at the sampled parameter values and weighted by the normalized weights.
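
Written out, the approximation could look as follows. The notation is an assumption chosen to match the variable names used in the code later in this post: $\theta_n$ is the $n$-th prior sample, $y_n$ its simulated output, $y^\star$ the observed data, $K_\epsilon$ the kernel and $\tilde{w}_n$ the unnormalized weight.

```latex
\hat{p}(\theta \mid y^\star) = \sum_{n=1}^{N} w_n \, \delta_{\theta_n}(\theta),
\qquad
w_n = \frac{\tilde{w}_n}{\sum_{m=1}^{N} \tilde{w}_m},
\qquad
\tilde{w}_n = K_\epsilon(y_n, y^\star).
```

Under this approximation, a posterior expectation reduces to a weighted sum over the samples: $\mathbb{E}[f(\theta) \mid y^\star] \approx \sum_{n=1}^{N} w_n f(\theta_n)$.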

In the next section, we will see a special case of this framework: Rejection ABC, where we choose the indicator function as our kernel function so that each sample is assigned a weight of either 0 or 1.
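
To make the difference between a soft and a hard kernel concrete, here is a minimal sketch of two kernels with the `kernel(y, y_star, epsilon)` signature used by the Soft-ABC code in this post. The names `gaussian_kernel` and `indicator_kernel`, the Euclidean distance and the Gaussian form are assumptions made for illustration, not necessarily the choices used to produce the results shown here.

```python
import numpy as np


def gaussian_kernel(y, y_star, epsilon):
    """Soft kernel: the weight decays smoothly with the distance to y_star."""
    dist = np.linalg.norm(np.asarray(y) - np.asarray(y_star))
    return np.exp(-0.5 * (dist / epsilon) ** 2)


def indicator_kernel(y, y_star, epsilon):
    """Hard kernel (Rejection ABC): weight 1 inside the epsilon-ball, else 0."""
    dist = np.linalg.norm(np.asarray(y) - np.asarray(y_star))
    return float(dist <= epsilon)


y_star = np.array([0.0, 0.0])   # hypothetical observation
y_near = np.array([0.05, 0.0])  # simulated output close to y_star
y_far = np.array([1.0, 1.0])    # simulated output far from y_star

for name, kernel in [("gaussian", gaussian_kernel), ("indicator", indicator_kernel)]:
    print(name, kernel(y_near, y_star, 0.1), kernel(y_far, y_star, 0.1))
```

The Gaussian kernel gives every sample a strictly positive weight in exact arithmetic, while the indicator kernel discards everything outside the epsilon-ball.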

Two Moons Example

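The following example is taken from ‘APT for Likelihood-free Inference’. We consider the Two Moons simulator which, given a two-dimensional parameter θ = (θ₁, θ₂), draws an angle a uniformly from [-π/2, π/2] and a radius r from a normal distribution with mean 0.1 and standard deviation 0.01, and returns the point (r cos a + 0.25, r sin a) shifted by (-|θ₁ + θ₂|, -θ₁ + θ₂) / √2.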

One could code the simulator in Python using the function below:

    import numpy as np

    def TM_simulator(theta):
        """Two Moons simulator for ABC."""
        t0, t1 = theta[0], theta[1]
        # Angle and radius of a point on the noisy half-circle
        a = np.random.uniform(low=-np.pi / 2, high=np.pi / 2)
        r = np.random.normal(loc=0.1, scale=0.01)
        p = np.array([r * np.cos(a) + 0.25, r * np.sin(a)])
        # Shift the point by a deterministic function of theta
        return p + np.array([-np.abs(t0 + t1), (-t0 + t1)]) / np.sqrt(2)

and the Soft-ABC algorithm could look as follows:

    import numpy as np

    def soft_abc(prior, simulator, kernel, epsilon, N, y_star):
        """Soft ABC algorithm."""
        samples = []           # Parameter samples (their size is that of theta, not y_star)
        weights = np.zeros(N)  # Unnormalized weights
        for n in range(N):
            theta_n = prior()                          # Sample from the prior
            y_n = simulator(theta_n)                   # Run the simulator
            weights[n] = kernel(y_n, y_star, epsilon)  # Unnormalized weight
            samples.append(theta_n)
        # Normalize weights (assumes at least one weight is non-zero)
        weights = weights / np.sum(weights)
        # Return weighted samples
        return np.array(samples), weights
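
The loop above can also be sketched in vectorized form, which makes it cheap to compare several values of epsilon. This is a variant written for illustration, not the code used to produce the results in this post. The helper names `two_moons_batch` and `gaussian_weights`, the uniform prior on [-1, 1]², the observation `y_star = (0, 0)`, the Gaussian kernel, `N` and the epsilon values are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, only for reproducibility


def two_moons_batch(theta, rng):
    """Vectorized Two Moons simulator; theta has shape (N, 2)."""
    n = theta.shape[0]
    a = rng.uniform(-np.pi / 2, np.pi / 2, size=n)
    r = rng.normal(0.1, 0.01, size=n)
    p = np.stack([r * np.cos(a) + 0.25, r * np.sin(a)], axis=1)
    shift = np.stack(
        [-np.abs(theta[:, 0] + theta[:, 1]), -theta[:, 0] + theta[:, 1]], axis=1
    )
    return p + shift / np.sqrt(2)


def gaussian_weights(y, y_star, epsilon):
    """Normalized Gaussian-kernel weights for a batch of simulated outputs."""
    sq_dist = np.sum((y - y_star) ** 2, axis=1)
    w_tilde = np.exp(-0.5 * sq_dist / epsilon**2)
    return w_tilde / np.sum(w_tilde)


N = 20_000
y_star = np.array([0.0, 0.0])                # assumed observation
theta = rng.uniform(-1.0, 1.0, size=(N, 2))  # assumed uniform prior on [-1, 1]^2
y = two_moons_batch(theta, rng)              # one simulator run per prior sample

for epsilon in (0.5, 0.1, 0.05):
    w = gaussian_weights(y, y_star, epsilon)
    post_mean = w @ theta  # weighted posterior mean of theta
    print(f"epsilon={epsilon}: weighted posterior mean = {post_mean}")
```

Because the weights depend on epsilon only through the kernel, the same prior samples and simulator outputs can be reused for every epsilon; only the weights need to be recomputed.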

Using

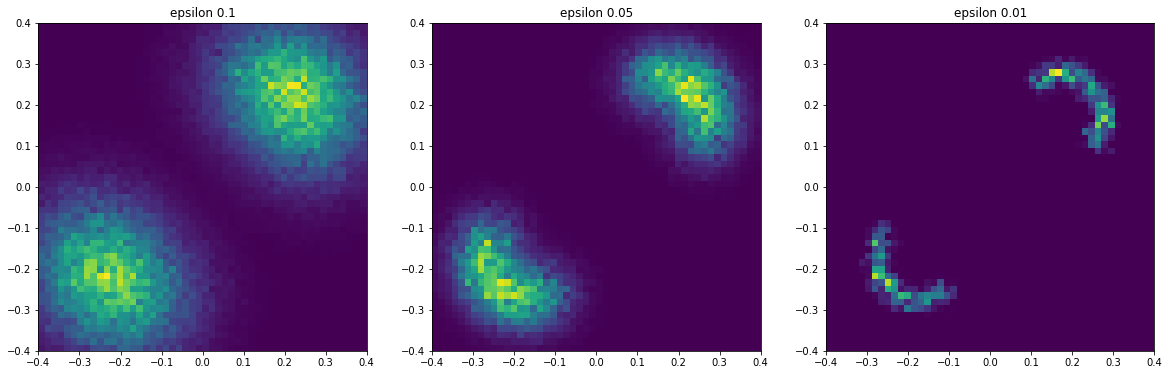

Above I have plotted the samples as histograms in which each sample is weighted by its normalized Soft-ABC weight. We can see that as epsilon gets smaller, the approximation becomes more accurate, since more weight is placed on samples whose simulated data is closer to the observed data.
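
A weighted histogram of this kind can be computed with NumPy's `histogram2d`, which accepts a `weights` argument. The sketch below is only an illustration: the helper name `weighted_histogram`, the plotting range and the random stand-in data are assumptions, and the figure above may have been produced differently.

```python
import numpy as np


def weighted_histogram(samples, weights, bins=50, lim=1.0):
    """2D histogram on [-lim, lim]^2 where each sample counts by its weight."""
    hist, x_edges, y_edges = np.histogram2d(
        samples[:, 0],
        samples[:, 1],
        bins=bins,
        range=[[-lim, lim], [-lim, lim]],
        weights=weights,
    )
    return hist, x_edges, y_edges


# Hypothetical stand-in for the (samples, weights) returned by a Soft-ABC run
rng = np.random.default_rng(0)
samples = rng.uniform(-1.0, 1.0, size=(1000, 2))
weights = rng.random(1000)
weights /= weights.sum()

hist, x_edges, y_edges = weighted_histogram(samples, weights, bins=20)
print(hist.shape, hist.sum())
```

With normalized weights and all samples inside the range, the bin values sum to one, so each bin can be read as the approximate posterior mass in that cell. For plotting, Matplotlib's `hist2d` takes the same `weights` argument.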

If you would like to play around with this example, you can find a tutorial Google Colab notebook here.