Gaussian with Unknown Variance and Unknown Mean

Finding the Objective Function

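Suppose that now we have samples $x_1, \dots, x_n$ drawn from a Gaussian $\mathcal{N}(\mu, \sigma^2)$ whose mean $\mu$ and standard deviation $\sigma$ are both unknown, and we want to estimate both parameters by maximizing the likelihood.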

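Once again, we find the expression for the log-likelihood of a single observation $x_i$:

$$\log p(x_i; \mu, \sigma) = -\frac{1}{2}\log(2\pi) - \log\sigma - \frac{(x_i - \mu)^2}{2\sigma^2}$$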
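A minimal sketch of the per-sample expression in NumPy; the helper name `log_density` and the integration grid are illustrative choices, not part of the original code. Exponentiating the log-density and summing it over a fine grid should give an area close to 1, which is a quick check that no constant was dropped:

```python
import math

import numpy as np


def log_density(x, mu, sigma):
    """Log of the N(mu, sigma^2) density at x (works elementwise on arrays)."""
    return -0.5 * math.log(2 * math.pi) - np.log(sigma) - (x - mu) ** 2 / (2 * sigma**2)


# Wide grid around mu = 2, sigma = 2 so that the tails are negligible.
grid = np.linspace(-20.0, 24.0, 200001)
density = np.exp(log_density(grid, mu=2.0, sigma=2.0))
area = np.sum(density) * (grid[1] - grid[0])  # simple Riemann sum of the density
print(area)
```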

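The average log-likelihood over all $n$ samples is then

$$\ell(\mu, \sigma) = \frac{1}{n}\sum_{i=1}^{n}\log p(x_i; \mu, \sigma) = -\frac{1}{2}\log(2\pi) - \log\sigma - \frac{1}{2\sigma^2}\cdot\frac{1}{n}\sum_{i=1}^{n}(x_i - \mu)^2$$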
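As a sketch (the function name `avg_log_likelihood` and the fixed seed are our own choices), the average log-likelihood can be evaluated directly on simulated data. The true parameters should score far higher than a poor guess such as the starting values $\mu = -3$, $\sigma = 1$ used in the code under Coding:

```python
import math

import numpy as np


def avg_log_likelihood(x, mu, sigma):
    """(1/n) * sum_i log p(x_i; mu, sigma) for a 1-D array of samples x."""
    return (-0.5 * math.log(2 * math.pi) - math.log(sigma)
            - np.mean((x - mu) ** 2) / (2 * sigma**2))


rng = np.random.default_rng(0)  # seed fixed only for reproducibility
x = rng.normal(loc=2.0, scale=2.0, size=10000)

at_truth = avg_log_likelihood(x, mu=2.0, sigma=2.0)
at_start = avg_log_likelihood(x, mu=-3.0, sigma=1.0)
print(at_truth, at_start)  # the true parameters should give the larger value
```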

Gradient Estimates and Updates

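Firstly, we take the derivative of the average log-likelihood $\ell(\mu, \sigma)$ with respect to $\mu$:

$$\frac{\partial \ell}{\partial \mu} = \frac{1}{\sigma^2}\cdot\frac{1}{n}\sum_{i=1}^{n}(x_i - \mu) = \frac{\bar{x} - \mu}{\sigma^2}, \qquad \bar{x} = \frac{1}{n}\sum_{i=1}^{n}x_i$$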

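Similarly, the derivative with respect to $\sigma$ is

$$\frac{\partial \ell}{\partial \sigma} = -\frac{1}{\sigma} + \frac{1}{\sigma^3}\cdot\frac{1}{n}\sum_{i=1}^{n}(x_i - \mu)^2$$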
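Both derivatives can be checked numerically. The sketch below compares them against central finite differences of the average log-likelihood at $\mu = -3$, $\sigma = 1$; the helper names, seed, sample size and step size `h` are arbitrary illustrative choices:

```python
import math

import numpy as np


def avg_log_likelihood(x, mu, sigma):
    """Average Gaussian log-likelihood ell(mu, sigma)."""
    return (-0.5 * math.log(2 * math.pi) - math.log(sigma)
            - np.mean((x - mu) ** 2) / (2 * sigma**2))


def grad_mu(x, mu, sigma):
    """Analytic d ell / d mu = (mean(x) - mu) / sigma^2."""
    return (np.mean(x) - mu) / sigma**2


def grad_sigma(x, mu, sigma):
    """Analytic d ell / d sigma = mean((x - mu)^2) / sigma^3 - 1 / sigma."""
    return np.mean((x - mu) ** 2) / sigma**3 - 1 / sigma


rng = np.random.default_rng(1)
x = rng.normal(loc=2.0, scale=2.0, size=1000)
mu, sigma, h = -3.0, 1.0, 1e-5

# Central finite differences, an independent check of the analytic formulas.
num_mu = (avg_log_likelihood(x, mu + h, sigma) - avg_log_likelihood(x, mu - h, sigma)) / (2 * h)
num_sigma = (avg_log_likelihood(x, mu, sigma + h) - avg_log_likelihood(x, mu, sigma - h)) / (2 * h)

err_mu = abs(num_mu - grad_mu(x, mu, sigma))
err_sigma = abs(num_sigma - grad_sigma(x, mu, sigma))
print(err_mu, err_sigma)  # both should be tiny
```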

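This means that our gradient-ascent updates will be

$$\mu_{t+1} = \mu_t + \gamma_\mu \frac{\bar{x} - \mu_t}{\sigma_t^2}$$

$$\sigma_{t+1} = \sigma_t + \gamma_\sigma\left(\frac{1}{\sigma_t^3}\cdot\frac{1}{n}\sum_{i=1}^{n}(x_i - \mu_t)^2 - \frac{1}{\sigma_t}\right)$$

where $\bar{x} = \frac{1}{n}\sum_{i=1}^{n}x_i$ is the sample mean and $\gamma_\mu, \gamma_\sigma > 0$ are the learning rates for $\mu$ and $\sigma$. Both updates use the current values $\mu_t$ and $\sigma_t$, so the two parameters are updated simultaneously.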
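The two update rules can be packaged as a single step. This is a minimal sketch with a hypothetical `update` helper, applied once to a tiny hand-checkable dataset: with $x = (0, 2, 4)$, $\mu_0 = 0$, $\sigma_0 = 1$, the $\mu$ gradient is $2$ and the $\sigma$ gradient is $20/3 - 1$:

```python
import numpy as np


def update(x, mu, sigma, gamma_mu, gamma_sigma):
    """One simultaneous gradient-ascent step on (mu, sigma)."""
    mu_grad = (np.mean(x) - mu) / sigma**2
    sigma_grad = np.mean((x - mu) ** 2) / sigma**3 - 1 / sigma
    # Both gradients were computed from the old mu and sigma before either changes.
    return mu + gamma_mu * mu_grad, sigma + gamma_sigma * sigma_grad


x = np.array([0.0, 2.0, 4.0])  # toy data: mean 2, mean of x**2 is 20/3
mu_new, sigma_new = update(x, mu=0.0, sigma=1.0, gamma_mu=0.1, gamma_sigma=0.01)
print(mu_new, sigma_new)
```

Returning the pair and assigning it in one statement mirrors the tuple assignment in the main loop, which avoids accidentally using the new $\mu$ in the $\sigma$ gradient.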

Coding

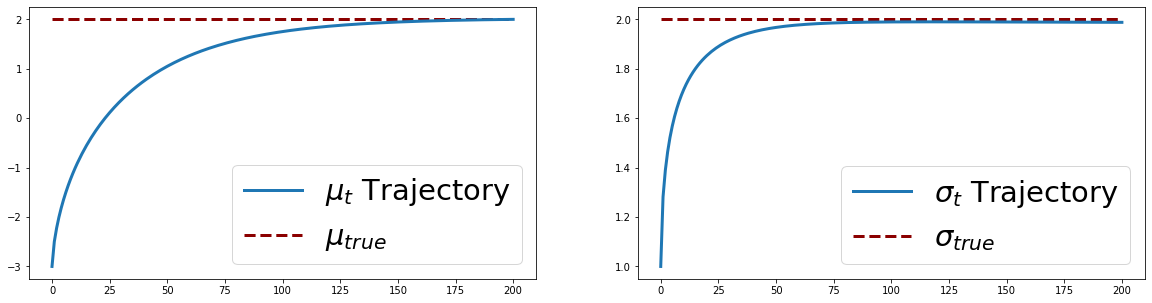

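The code below is very similar to the one for example 1. The only differences are that we now have both a true mean (`mu_true`) and a true standard deviation (`sigma_true`), and that we run gradient ascent on both $\mu$ and $\sigma$, each with its own learning rate (`gamma_mu` and `gamma_sigma`).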

```python
# Import stuff
import numpy as np
import matplotlib.pyplot as plt

# Generate data
n = 10000
mu_true = 2.0
sigma_true = 2.0
x = np.random.normal(loc=mu_true, scale=sigma_true, size=n)

# Algorithm settings
mu = -3.0           # Start with mu = -3.0
sigma = 1.0         # Start with sigma = 1.0
gamma_mu = 0.1      # Learning rate for mu
gamma_sigma = 0.01  # Learning rate for sigma
n_iter = 500        # Number of iterations

# Loop through and update mu and sigma
mus = [mu]
sigmas = [sigma]
for _ in range(n_iter):
    # Compute gradients of the average log-likelihood
    mu_grad = (np.mean(x) - mu) / (sigma**2)
    sigma_grad = np.mean((x - mu)**2) / sigma**3 - 1 / sigma

    # Update mu and sigma simultaneously (both gradients use the old values)
    mu, sigma = mu + gamma_mu*mu_grad, sigma + gamma_sigma*sigma_grad

    # Store mu and sigma
    mus.append(mu)
    sigmas.append(sigma)

fig, ax = plt.subplots(nrows=1, ncols=2, figsize=(20, 5))

# Plot mu trajectory
ax[0].plot(range(n_iter+1), mus, lw=3)
ax[0].hlines(y=mu_true, xmin=0, xmax=n_iter,
             color='darkred', linestyle='dashed', lw=3)
ax[0].legend([r'$\mu_t$' + " Trajectory", r'$\mu_{true}$'], prop={'size': 29}, loc='lower right')

# Plot sigma trajectory
ax[1].plot(range(n_iter+1), sigmas, lw=3)
ax[1].hlines(y=sigma_true, xmin=0, xmax=n_iter,
             color='darkred', linestyle='dashed', lw=3)
ax[1].legend([r'$\sigma_t$' + " Trajectory", r'$\sigma_{true}$'], prop={'size': 29})

plt.show()
```
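Setting both derivatives to zero gives the closed-form maximum-likelihood estimates $\hat{\mu} = \bar{x}$ and $\hat{\sigma}^2 = \frac{1}{n}\sum_{i=1}^{n}(x_i - \bar{x})^2$, which is `np.std(x)` with its default `ddof=0`. As a sanity check, the sketch below reruns the same loop and settings without plotting (the seed is an arbitrary choice) and prints the gradient-ascent result next to the closed form:

```python
import numpy as np

rng = np.random.default_rng(0)  # seed chosen only for reproducibility
x = rng.normal(loc=2.0, scale=2.0, size=10000)

# Closed-form MLE: the point where both gradients vanish.
mu_mle = np.mean(x)
sigma_mle = np.std(x)  # ddof=0 divides by n, matching the MLE

# Same starting point, learning rates and iteration count as the loop above.
mu, sigma = -3.0, 1.0
gamma_mu, gamma_sigma = 0.1, 0.01
for _ in range(500):
    mu_grad = (np.mean(x) - mu) / sigma**2
    sigma_grad = np.mean((x - mu) ** 2) / sigma**3 - 1 / sigma
    mu, sigma = mu + gamma_mu * mu_grad, sigma + gamma_sigma * sigma_grad

print(f"gradient ascent: mu={mu:.4f}, sigma={sigma:.4f}")
print(f"closed form:     mu={mu_mle:.4f}, sigma={sigma_mle:.4f}")
```

$\mu_t$ should settle on $\bar{x}$ well within 500 iterations, while $\sigma_t$ moves more slowly because of its smaller learning rate, so it may still be slightly short of the closed-form value when the run ends.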

You can find and run a working version of this code in a Google Colab notebook.