Sample Space

The sample space $\Omega$ is the set of all outcomes of our probabilistic experiment.

For instance:

- Experiment: A single toss of a coin.

- Possible Outcomes: $H$ (heads) and $T$ (tails).

- Sample Space: $\Omega = \{H, T\}$.

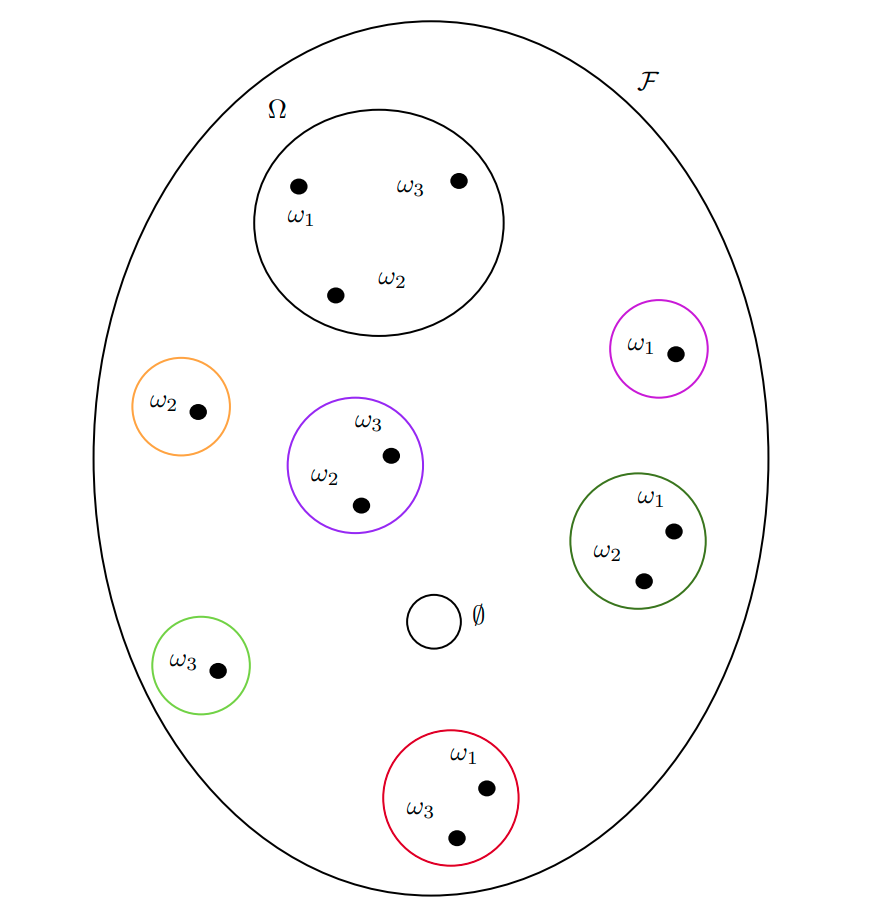

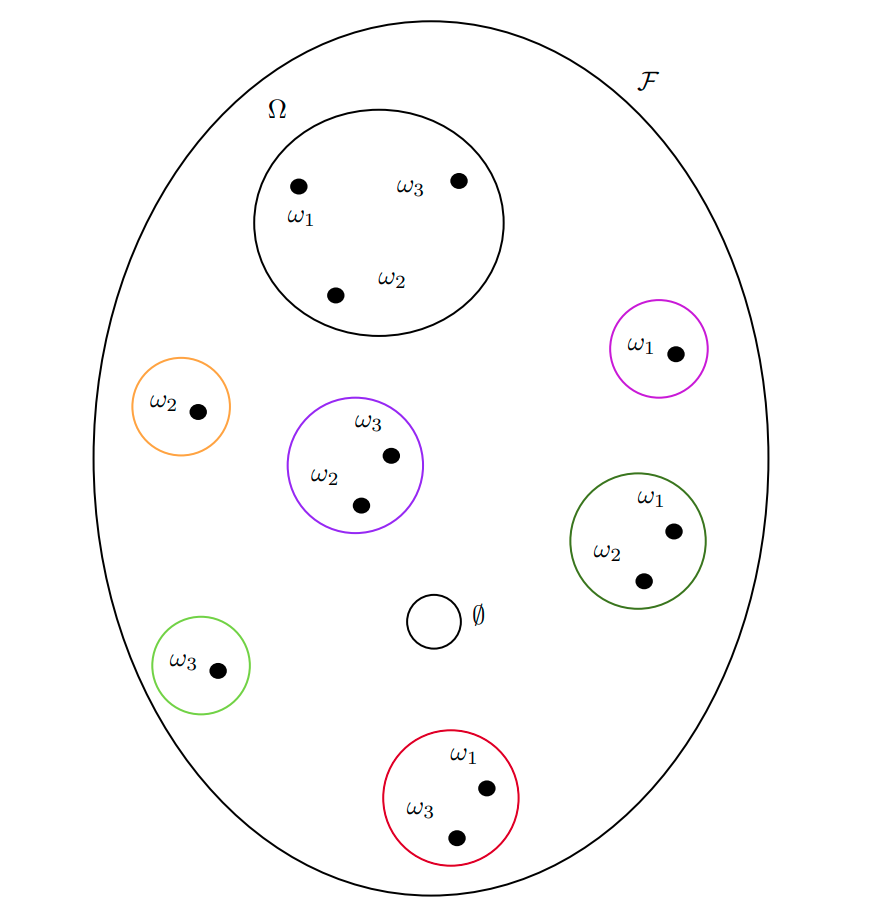

Event Space

The event space $\mathcal{F}$ is a sigma-algebra on the sample space $\Omega$. It is a subset of the power set of $\Omega$ whose elements, called events, satisfy some regularity conditions. When $\Omega$ is finite, the power set $2^{\Omega}$ is a valid sigma-algebra that can be used as the event space.

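In practice, a sigma-algebra $\mathcal{F}$ on $\Omega$ must satisfy the following conditions:

- The sample space is an event (called the “sure” event): $\Omega \in \mathcal{F}$.

- Closed under complementation: if $A \in \mathcal{F}$ then $A^c = \Omega \setminus A \in \mathcal{F}$.

- Closed under countable unions: if $A_1, A_2, \ldots \in \mathcal{F}$ then $\bigcup_{i=1}^{\infty} A_i \in \mathcal{F}$.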
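The three conditions can be checked mechanically when the sample space is finite. The following is a minimal sketch for the coin toss example; the helper names `power_set` and `is_sigma_algebra` are made up for illustration. It relies on the fact that, for a finite collection of sets, closure under pairwise unions is enough to get closure under countable unions.

```python
from itertools import chain, combinations


def power_set(omega):
    """Return the power set of a finite set as a set of frozensets."""
    items = list(omega)
    subsets = chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1)
    )
    return {frozenset(s) for s in subsets}


def is_sigma_algebra(omega, events):
    """Check the three sigma-algebra conditions for a finite collection of events."""
    omega = frozenset(omega)
    contains_omega = omega in events
    closed_complement = all(omega - a in events for a in events)
    # For finitely many sets, pairwise closure implies countable closure.
    closed_union = all(a | b in events for a in events for b in events)
    return contains_omega and closed_complement and closed_union


omega = {"H", "T"}
event_space = power_set(omega)  # {}, {H}, {T}, {H, T}

# A collection that is missing the complement of {H}, so it is not a sigma-algebra.
broken = {frozenset(), frozenset({"H"}), frozenset(omega)}

print(is_sigma_algebra(omega, event_space))  # True
print(is_sigma_algebra(omega, broken))       # False
```

The trivial collection containing only the empty set and $\Omega$ also passes the check, which shows that the power set is not the only possible event space.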

A pair of a set and its sigma-algebra (in this case the sample space $\Omega$ and the event space $\mathcal{F}$) is called a measurable space, written $(\Omega, \mathcal{F})$. This is because we can define a function that “measures” the size of every element of $\mathcal{F}$. Indeed, any element of a sigma-algebra is called a measurable set.

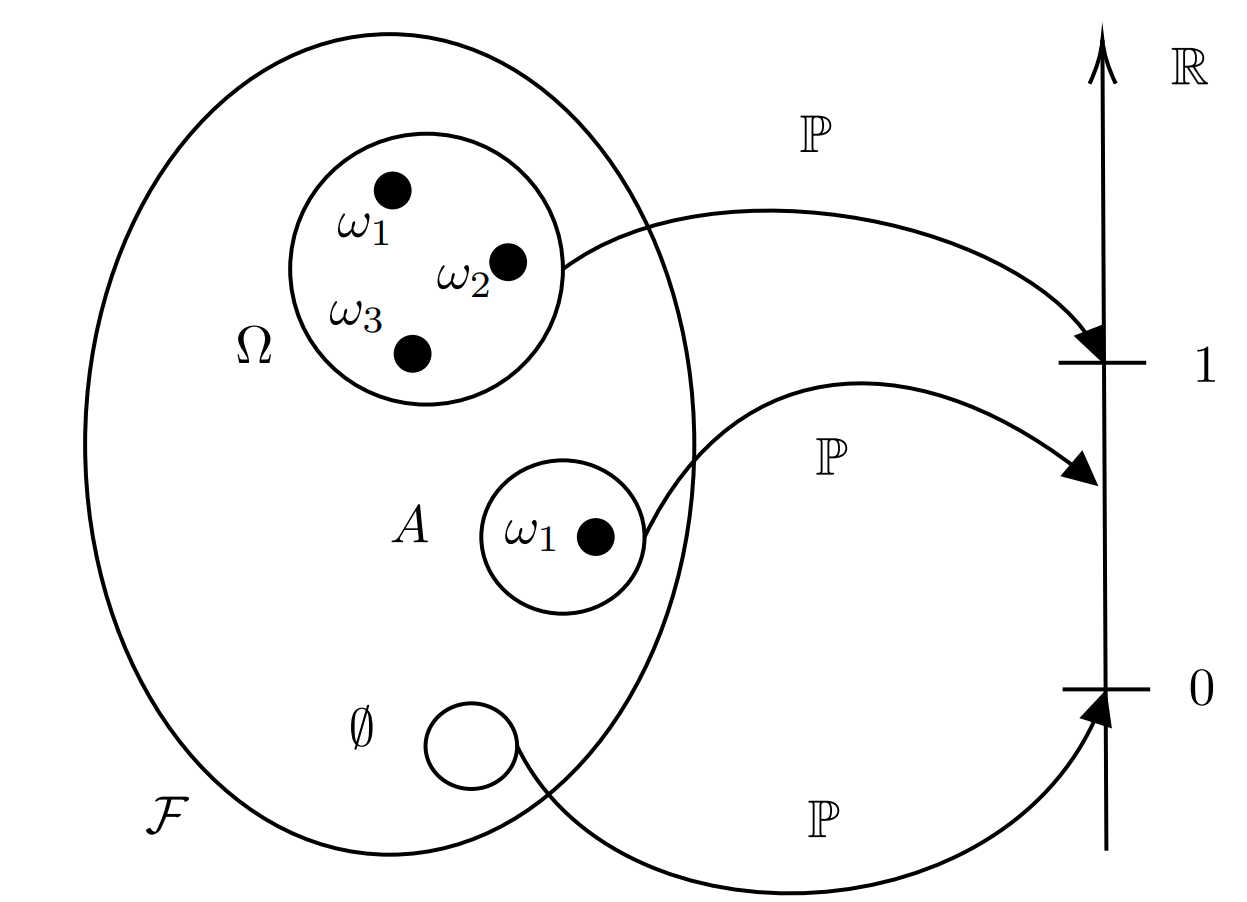

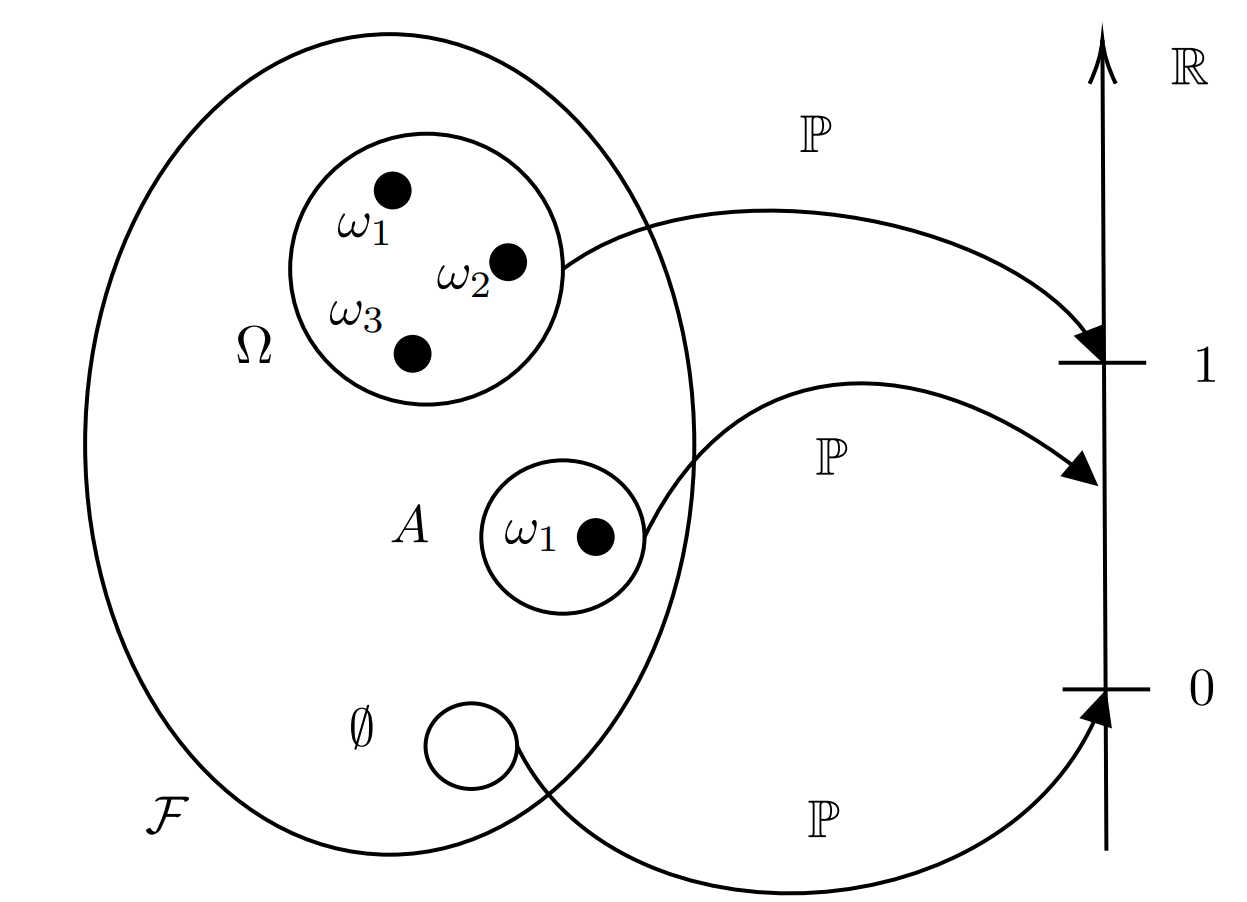

Probability Measure and Probability Space

After you’ve defined the sigma-algebra called the event space, the next step is to define a probability space. A probability space is a triplet $(\Omega, \mathcal{F}, \mathbb{P})$, i.e. it is composed of three elements, two of which we already know.

- A sample space $\Omega$.

- An event space $\mathcal{F}$, which is a sigma-algebra on $\Omega$.

- A probability measure $\mathbb{P}$.

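A probability measure is a special case of a measure. Suppose you have a measurable space $(\Omega, \mathcal{F})$ (also referred to simply as $\Omega$). Then a function

$$\mu : \mathcal{F} \to [0, \infty]$$

is called a measure on $(\Omega, \mathcal{F})$ if it gives a size of zero to the empty set, $\mu(\emptyset) = 0$, and if it is countably additive: for any countable collection of pairwise disjoint sets $A_1, A_2, \ldots \in \mathcal{F}$,

$$\mu\left(\bigcup_{i=1}^{\infty} A_i\right) = \sum_{i=1}^{\infty} \mu(A_i).$$

Notice how the measure takes elements of $\mathcal{F}$ and not elements of the sample space $\Omega$! That is, it maps events, not outcomes. A probability measure $\mathbb{P}$ is just a measure, as above, with the additional property that it maps to $[0, 1]$ rather than to $[0, \infty]$, with $\mathbb{P}(\Omega) = 1$. In other words, it assigns probabilities to events, hence the name.

We can see in the diagram above how the probability measure maps the empty set to zero, the sample space to $1$ and any other event to some number in $[0, 1]$.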
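As a small illustration, here is a sketch of a probability measure on the coin toss event space. The fair-coin weights and the function name `prob` are assumptions made for the example. The function takes an event (a set of outcomes) as input, not a single outcome.

```python
from fractions import Fraction
from itertools import chain, combinations

omega = frozenset({"H", "T"})

# Assumed weights for a fair coin (not specified in the text above).
weights = {"H": Fraction(1, 2), "T": Fraction(1, 2)}


def prob(event):
    """Probability measure: maps an event (a subset of omega) to [0, 1]."""
    return sum((weights[outcome] for outcome in event), Fraction(0))


# The event space is the power set of omega.
events = [
    frozenset(s)
    for s in chain.from_iterable(
        combinations(sorted(omega), r) for r in range(len(omega) + 1)
    )
]

for event in events:
    print(sorted(event), prob(event))
```

Because the events $\{H\}$ and $\{T\}$ are disjoint, additivity requires `prob({"H"}) + prob({"T"})` to equal `prob({"H", "T"})`, which is the probability of the sure event.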

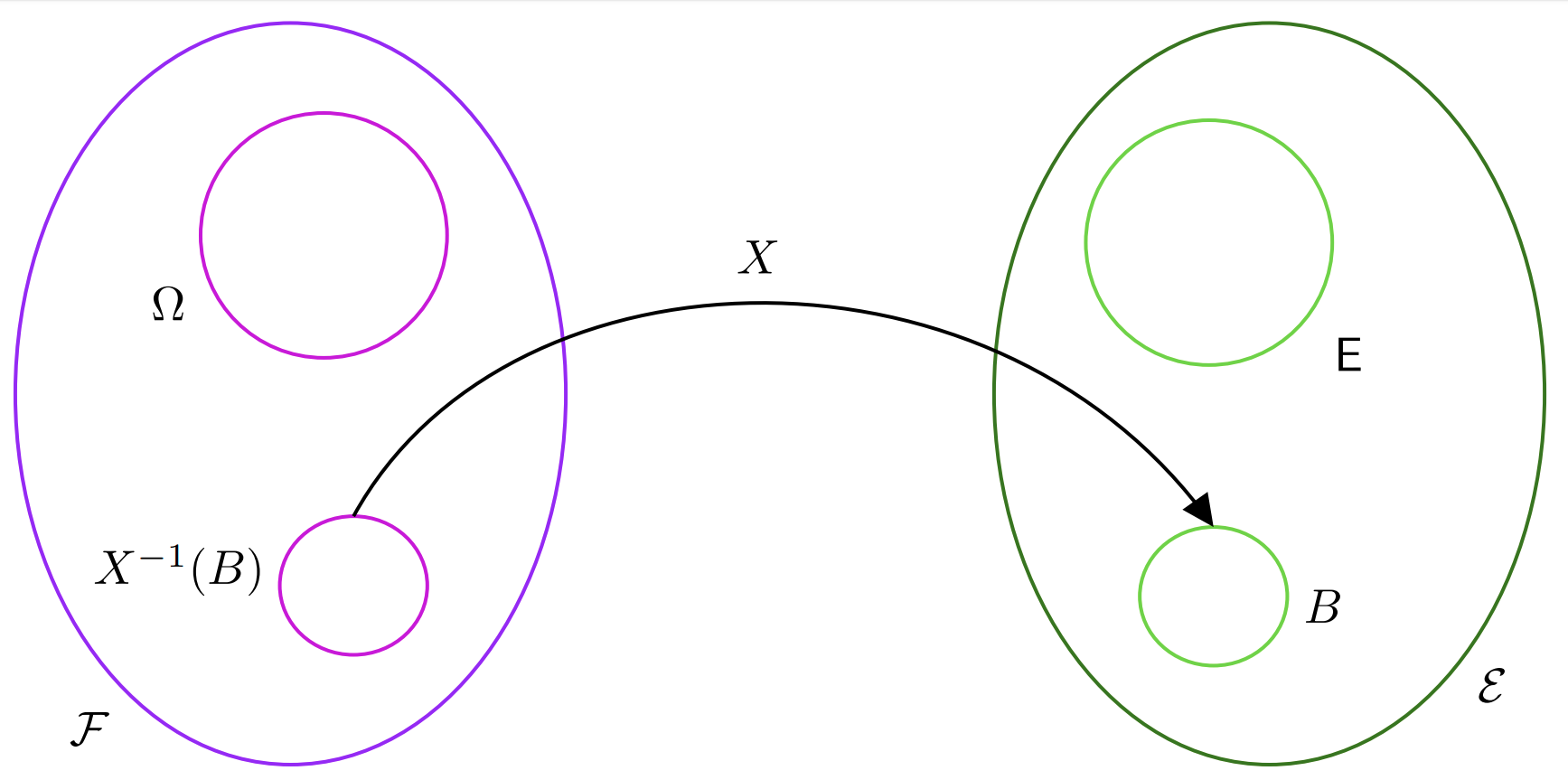

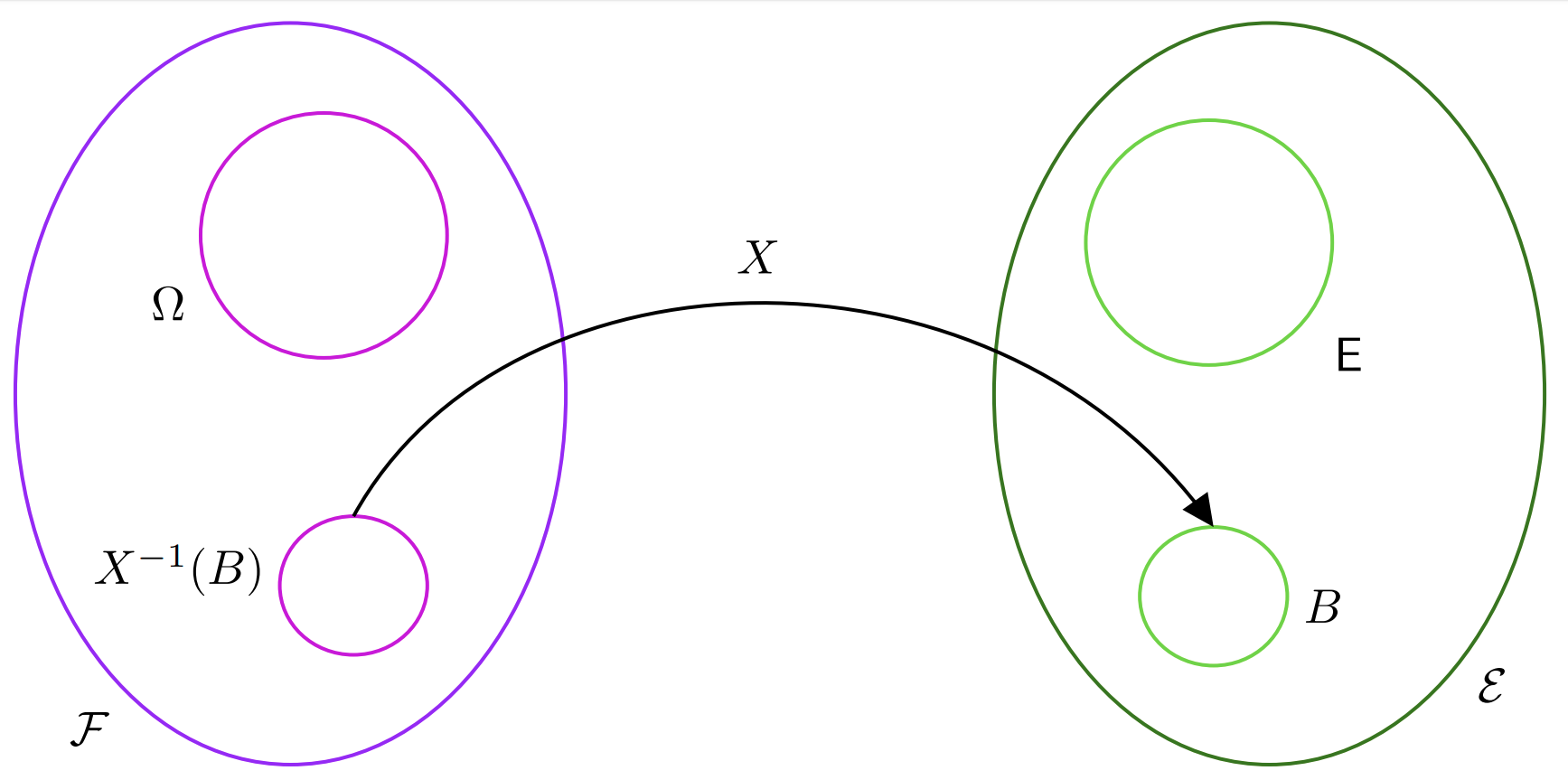

Random Variable

A random variable is also called a measurable function. Suppose you have two measurable spaces. One could be the pair of sample space and event space $(\Omega, \mathcal{F})$, and the other one could be some other arbitrary pair of a set $E$ and of its sigma-algebra $\mathcal{E}$, although usually we choose $E = \mathbb{R}$ and $\mathcal{E} = \mathcal{B}(\mathbb{R})$, the Borel sigma-algebra. Then a measurable function is a function that maps elements in $\Omega$ to elements in $E$ with some additional properties. Notice how this function maps outcomes to elements of $E$; it does not map events.

What property should this map satisfy? Imagine that we knew our sample space and event space, as in the coin tossing example. It might be a bit unhelpful to work on the actual sample space and event space, so instead we map them to alternative ones that we are more comfortable using, for instance $E = \mathbb{R}$ and $\mathcal{E} = \mathcal{B}(\mathbb{R})$. Then, it’s clear that we want our mapping to have a property that guarantees a sort of correspondence between the events in our original event space $\mathcal{F}$ and our transformed event space $\mathcal{E}$. For this reason, we require our measurable function, or random variable,

$$X : \Omega \to E$$

to be such that the preimage of any $\mathcal{E}$-measurable set is an $\mathcal{F}$-measurable set:

$$X^{-1}(B) = \{\omega \in \Omega : X(\omega) \in B\} \in \mathcal{F} \qquad \text{for every } B \in \mathcal{E}.$$

In the diagram above we can see how the set $B$, which is an element of $\mathcal{E}$, has a pre-image, which we call $X^{-1}(B)$, which is an element of $\mathcal{F}$!
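The preimage condition can be tested directly on finite spaces. The sketch below uses a finite target set $E = \{0, 1\}$ with its power set, rather than $\mathbb{R}$ with the Borel sigma-algebra, purely so that every set can be enumerated. The names `preimage` and `is_measurable` are illustrative.

```python
def preimage(X, omega, B):
    """Return the set of outcomes that X maps into B."""
    return frozenset(w for w in omega if X[w] in B)


def is_measurable(X, omega, F, E_sigma):
    """Check that the preimage of every set in E_sigma is an event in F."""
    return all(preimage(X, omega, B) in F for B in E_sigma)


omega = frozenset({"H", "T"})
X = {"H": 1, "T": 0}  # random variable: heads -> 1, tails -> 0

# Sigma-algebra on the target set E = {0, 1}: its power set.
E_sigma = {frozenset(), frozenset({0}), frozenset({1}), frozenset({0, 1})}

# Two candidate event spaces on omega.
F_full = {frozenset(), frozenset({"H"}), frozenset({"T"}), omega}
F_trivial = {frozenset(), omega}

print(is_measurable(X, omega, F_full, E_sigma))     # True
print(is_measurable(X, omega, F_trivial, E_sigma))  # False
```

With the trivial event space, the preimage of $\{1\}$ is $\{H\}$, which is not an event, so the same map $X$ fails to be a random variable. Measurability depends on the sigma-algebras, not only on the function.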

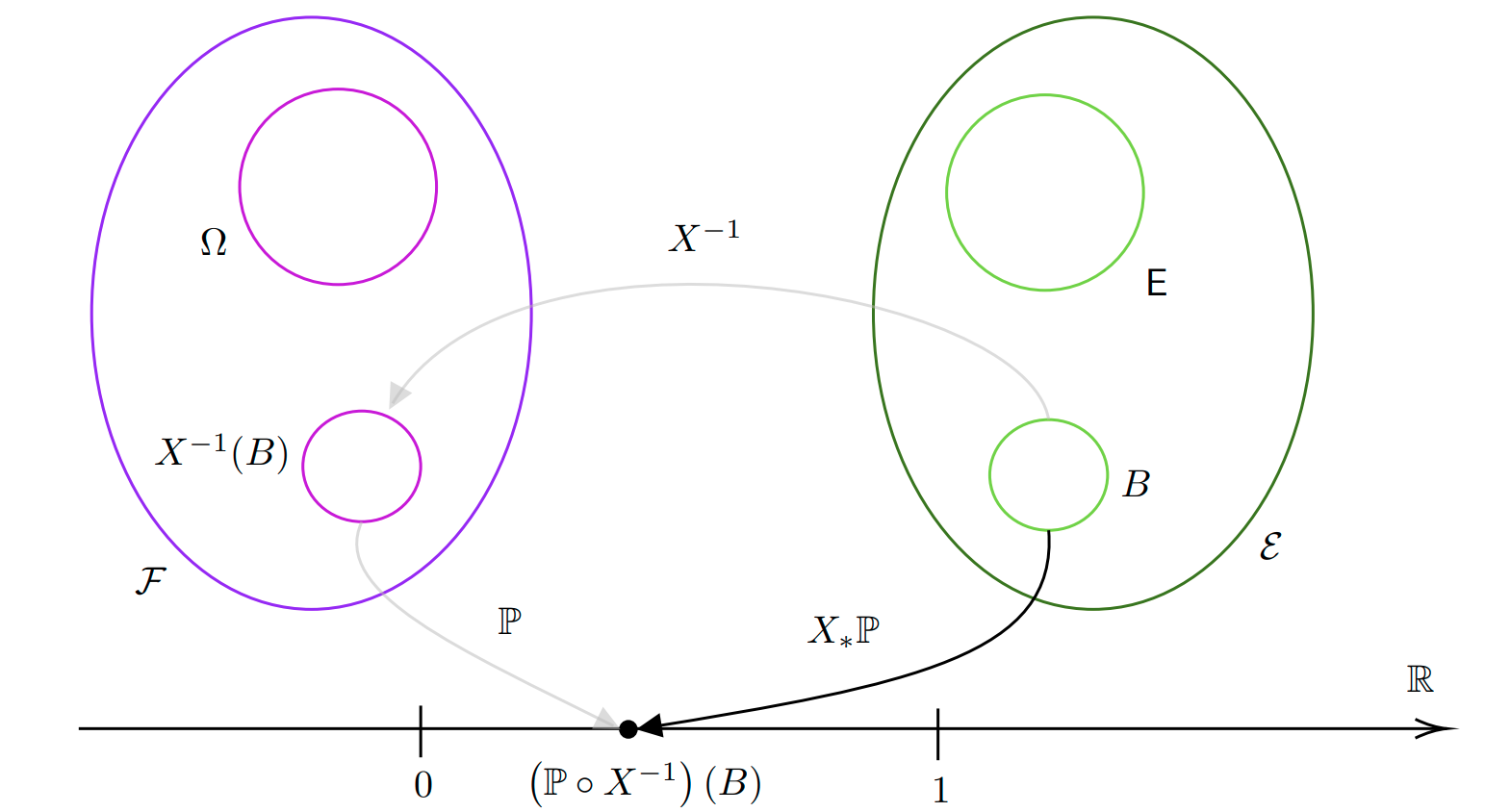

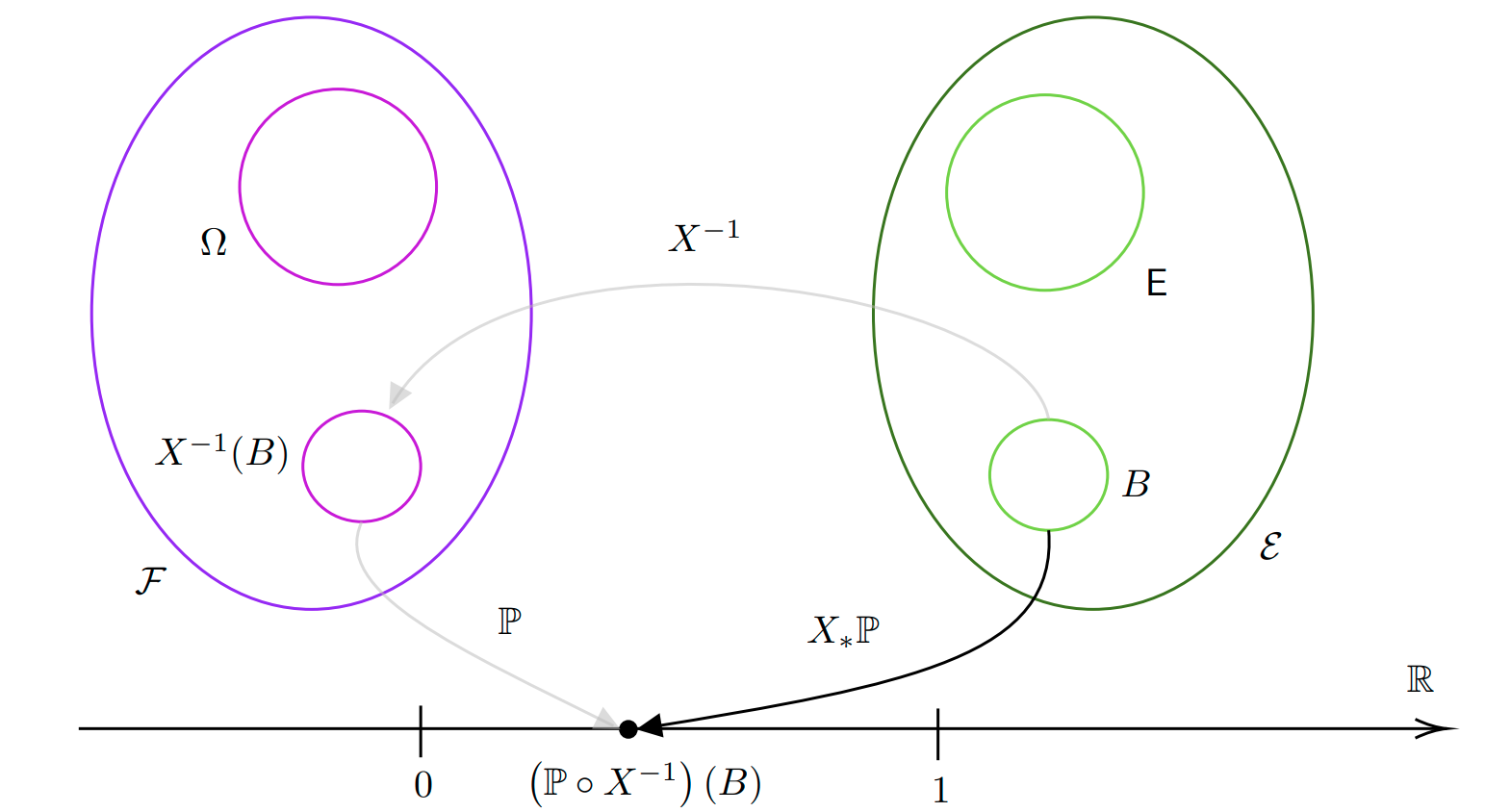

Probability Distribution

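A probability distribution is also called a pushforward measure of the probability measure $\mathbb{P}$ via the random variable $X$. Suppose we have a probability space $(\Omega, \mathcal{F}, \mathbb{P})$. This means we can assign probabilities to events in $\mathcal{F}$. Now suppose we have a measurable space $(E, \mathcal{E})$ but we don’t yet have a probability measure to measure events in it. How can we go about measuring sets in $\mathcal{E}$?

The key idea is that, given a set $B$ in $\mathcal{E}$, we can use a random variable $X : \Omega \to E$ to find the pre-image $X^{-1}(B)$ of such a set in the event space $\mathcal{F}$ and then measure this set via the probability measure $\mathbb{P}$. This will then be our probability measurement also for $B$, as shown in the figure below.

The probability distribution, or pushforward, is then denoted as $\mathbb{P}_X$ or $X_* \mathbb{P}$. The probability distribution is therefore a function mapping sets in $\mathcal{E}$ into $[0, 1]$:

$$\mathbb{P}_X : \mathcal{E} \to [0, 1], \qquad \mathbb{P}_X(B) = \mathbb{P}(X^{-1}(B)).$$

In practice one often uses $(E, \mathcal{E}) = (\mathbb{R}, \mathcal{B}(\mathbb{R}))$ so we have

$$\mathbb{P}_X : \mathcal{B}(\mathbb{R}) \to [0, 1].$$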
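To make the pushforward concrete, here is a minimal sketch with an assumed experiment of two fair coin tosses, where the random variable counts the number of heads. The function `pushforward` measures a set of values by first pulling it back to the sample space and then applying the original probability measure.

```python
from fractions import Fraction
from itertools import product

# Assumed experiment: two tosses of a fair coin.
omega = ["".join(t) for t in product("HT", repeat=2)]  # HH, HT, TH, TT


def prob(event):
    """Uniform probability measure on omega (fair coin assumption)."""
    return Fraction(len(event), len(omega))


def X(w):
    """Random variable: number of heads in the outcome."""
    return w.count("H")


def pushforward(B):
    """Distribution of X: measure B via the preimage of B under X."""
    pre = {w for w in omega if X(w) in B}
    return prob(pre)


print(pushforward({0}))        # 1/4
print(pushforward({1}))        # 1/2
print(pushforward({0, 1, 2}))  # 1
```

Note that `pushforward` accepts sets of values of $X$, while `prob` accepts sets of outcomes. The random variable is the bridge between the two.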

Probability Density Function

Consider $(\mathbb{R}, \mathcal{B}(\mathbb{R}))$. Suppose we have the usual Lebesgue measure $\lambda$ on such a measurable space. This would assign to each set $B \in \mathcal{B}(\mathbb{R})$ our usual idea of size. However, now we have the random variable $X$ and we would like to “weight” the size of a set based on the “likelihood” of it happening. To do so, we assign a density $f_X(x)$ to every point $x$ in $\mathbb{R}$, and to compute the new measure of $B$ according to the distribution $\mathbb{P}_X$ of $X$ we simply integrate the density over all points in $B$ with respect to the Lebesgue measure

$$\mathbb{P}_X(B) = \int_B f_X(x) \, d\lambda(x).$$

Essentially the density is almost like a derivative in the sense that it describes the rate of change of $\mathbb{P}_X$ with respect to $\lambda$. For this reason, we denote the density as follows

$$f_X = \frac{d\mathbb{P}_X}{d\lambda}.$$

This is called the Radon-Nikodym derivative, and it exists when $\mathbb{P}_X$ is absolutely continuous with respect to $\lambda$. Using this notation is very convenient because we can use it to change the measure we are using to integrate.

The function $f_X$ is called the probability density function of the random variable $X$. Notice how one can express the probability distribution also in terms of the original probability measure

$$\mathbb{P}_X(B) = \mathbb{P}(X^{-1}(B)) = \int_{X^{-1}(B)} d\mathbb{P}.$$
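As a numerical illustration, the sketch below assumes that $X$ is a standard normal random variable (an assumption made for the example, not something fixed by the text) and approximates the probability of the set $B = [-1, 1]$ by integrating the density over $B$. A midpoint Riemann sum stands in for the Lebesgue integral, which is fine here because the density is continuous.

```python
import math


def normal_pdf(x):
    """Density of a standard normal with respect to Lebesgue measure."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def normal_cdf(x):
    """Closed-form standard normal CDF, used only as a cross-check."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def integrate_density(f, a, b, n=10_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))


# Probability that X falls in B = [-1, 1], computed by integrating the density.
prob_B = integrate_density(normal_pdf, -1.0, 1.0)
exact = normal_cdf(1.0) - normal_cdf(-1.0)

print(round(prob_B, 4), round(exact, 4))  # both approximately 0.6827
```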

Expectation, Change of Variable and Law of the Unconscious Statistician

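The expectation of the random variable $X$ is given by the following Lebesgue integral

$$\mathbb{E}[X] = \int_{\Omega} X(\omega) \, d\mathbb{P}(\omega).$$

There is an important theorem called the change of variable formula (i.e. LOTUS) that tells us that we can write the expectation of a transformed random variable $g(X)$, where $g : \mathbb{R} \to \mathbb{R}$ is measurable, in terms of the Lebesgue measure as follows

$$\mathbb{E}[g(X)] = \int_{\Omega} g(X(\omega)) \, d\mathbb{P}(\omega) = \int_{\mathbb{R}} g(x) \, d\mathbb{P}_X(x) = \int_{\mathbb{R}} g(x) f_X(x) \, d\lambda(x),$$

where $\mathbb{P}_X$ is the distribution of $X$ and $f_X$ is its probability density function.

Importantly, notice how the initial integral was over $\Omega$ and now it’s over $\mathbb{R}$.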
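The change of variable formula can be verified exactly in a discrete setting. The sketch below assumes an experiment of two fair dice, with $X$ the sum of the faces and $g(x) = x^2$; here the "density" is a probability mass function, i.e. a density with respect to counting measure rather than Lebesgue measure. It computes the expectation once as a sum over the sample space and once as a sum over the values of $X$.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# Assumed experiment: two fair six-sided dice.
omega = list(product(range(1, 7), repeat=2))
p_outcome = Fraction(1, len(omega))  # each outcome has probability 1/36


def X(w):
    """Random variable: sum of the two faces."""
    return w[0] + w[1]


def g(x):
    """Transformation applied to X."""
    return x * x


# Integral over the sample space: sum of g(X(w)) * P({w}).
expectation_over_omega = sum(g(X(w)) * p_outcome for w in omega)

# Distribution of X (pushforward), then the integral over the values of X.
dist = defaultdict(Fraction)
for w in omega:
    dist[X(w)] += p_outcome
expectation_over_values = sum(g(x) * p for x, p in dist.items())

print(expectation_over_omega, expectation_over_values)  # 329/6 329/6
```

The first sum has 36 terms (one per outcome) and the second has only 11 (one per value of $X$), which is the practical benefit of integrating against the distribution instead of the original probability measure.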

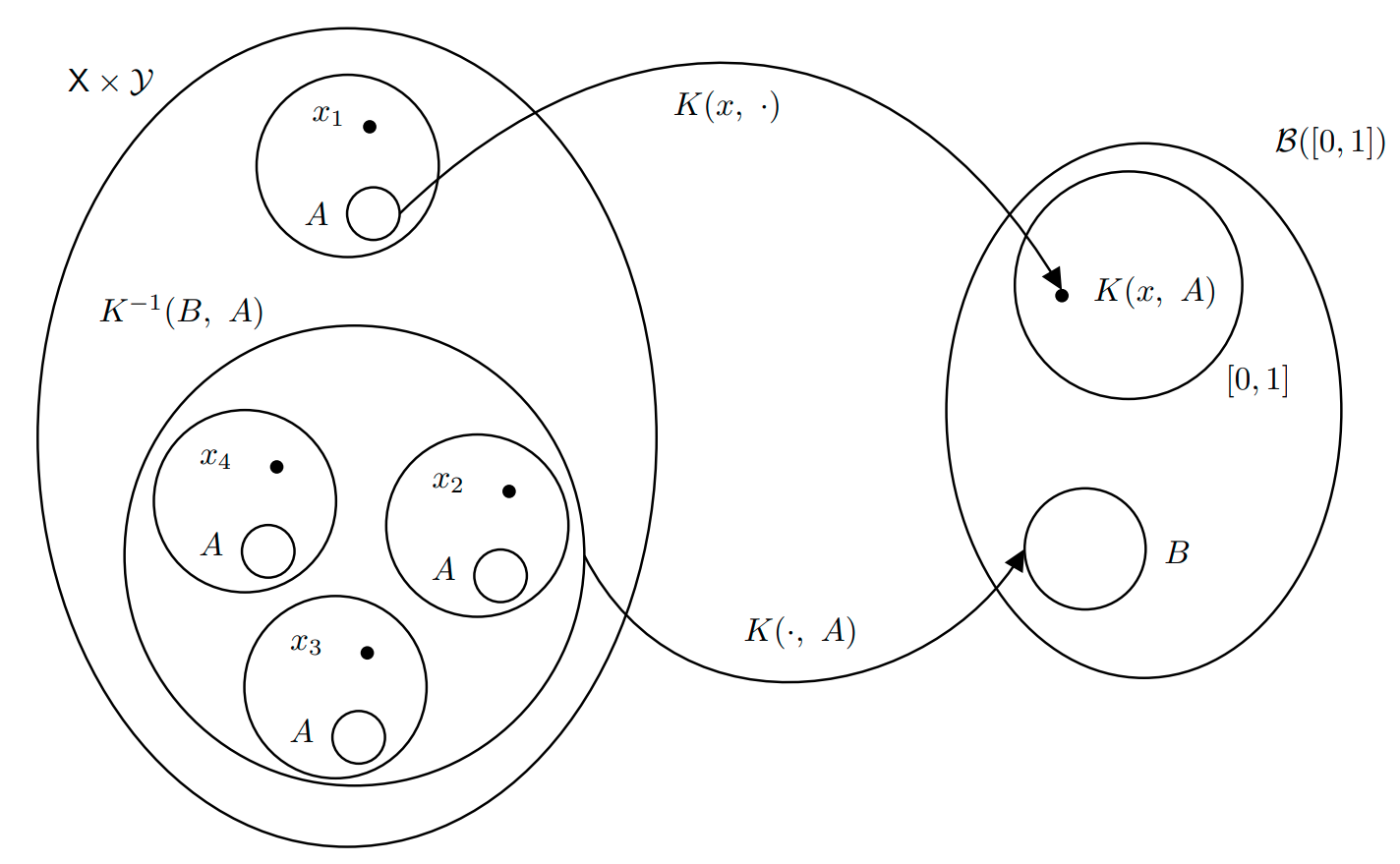

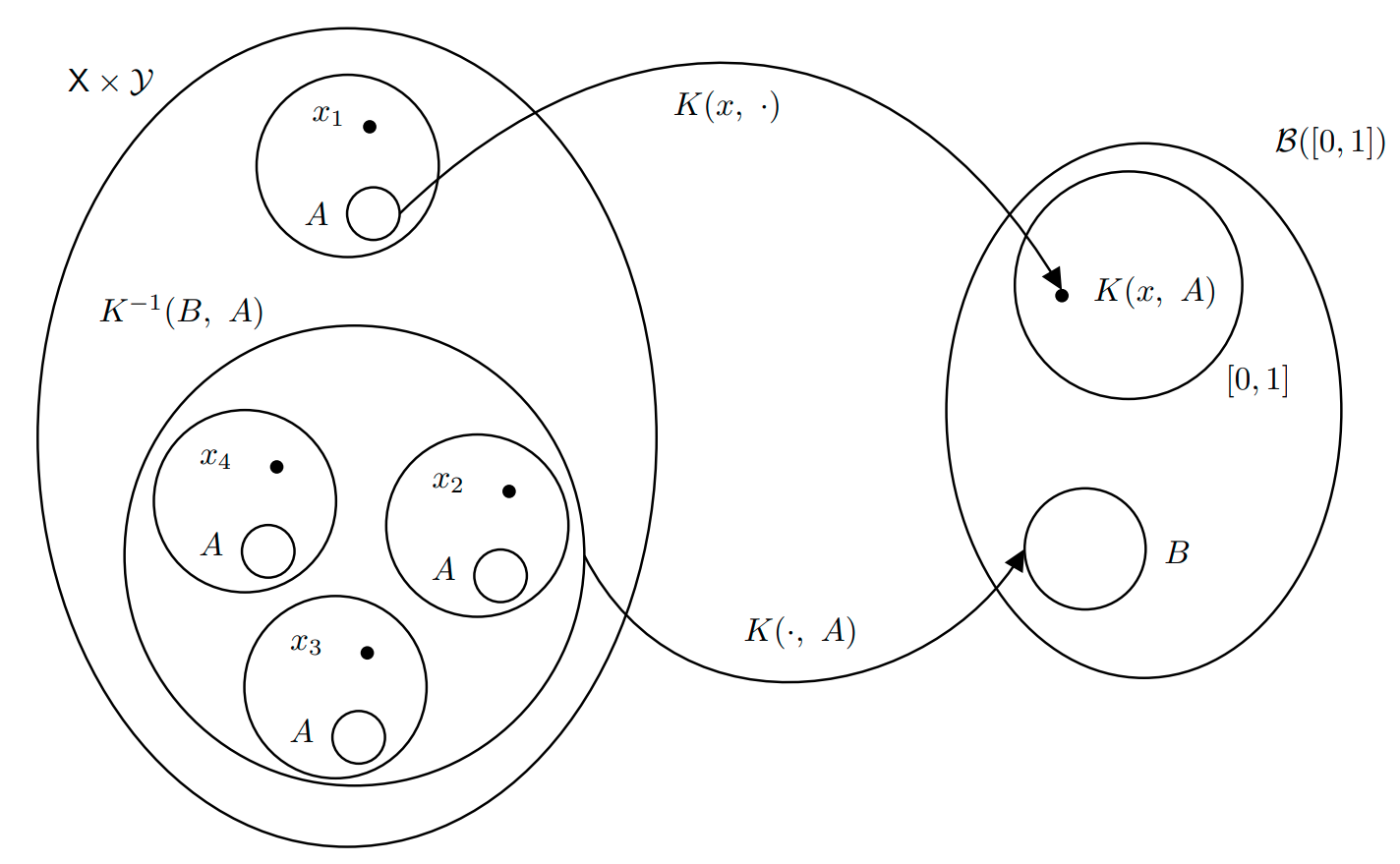

Markov Transition Kernel

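Let $(S, \mathcal{S})$ and $(T, \mathcal{T})$ be two measurable spaces. A Markov Kernel is a map

$$K : S \times \mathcal{T} \to [0, 1]$$

satisfying the following properties:

- $K(x, \cdot)$ is a probability measure on $(T, \mathcal{T})$ for every $x \in S$. In practice this means that $K(x, A)$ measures the probability of jumping from $x$ to any point $y \in A$.

- $K(\cdot, A)$ is a measurable function for every $A \in \mathcal{T}$. This means that given a set $A$ to jump to, the function that maps a point $x$ to the probability of jumping to any point in $A$ is measurable, i.e. a random variable.

The Markov Kernel defines two operators:

It operates on the left with measures: Let $\mu$ be a measure on $(S, \mathcal{S})$. Then $\mu K$ is a measure on $(T, \mathcal{T})$

$$\mu K(A) = \int_S \mu(dx) \, K(x, A), \qquad A \in \mathcal{T}.$$

Basically $\mu K(A)$ gives you the average probability of jumping to some point $y$ where $y \in A$ if you start from a point $x$ distributed according to $\mu$. Indeed, if we define $k_A(x)$ as $K(x, A)$ then, when $\mu$ is a probability measure, we can write

$$\mu K(A) = \int_S k_A(x) \, \mu(dx) = \mathbb{E}_{\mu}[k_A].$$

It operates on the right with measurable functions: for a bounded measurable function $f : T \to \mathbb{R}$,

$$K f(x) = \int_T K(x, dy) \, f(y), \qquad x \in S.$$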
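On a finite state space both operators reduce to matrix products. The sketch below uses an assumed two-state kernel stored as a row-stochastic matrix, where `K[i][j]` is the probability of jumping from state `i` to state `j`; the transition probabilities and the names `left_action` and `right_action` are made up for illustration. Measures act as row vectors on the left and functions act as column vectors on the right.

```python
from fractions import Fraction

# Assumed two-state Markov kernel: each row is a probability measure.
K = [
    [Fraction(9, 10), Fraction(1, 10)],
    [Fraction(1, 2), Fraction(1, 2)],
]


def left_action(mu, K):
    """Measure mu K: (mu K)(j) = sum_i mu(i) K(i, j)."""
    n_cols = len(K[0])
    return [sum(mu[i] * K[i][j] for i in range(len(K))) for j in range(n_cols)]


def right_action(K, f):
    """Function K f: (K f)(i) = sum_j K(i, j) f(j)."""
    return [sum(K[i][j] * f[j] for j in range(len(f))) for i in range(len(K))]


mu = [Fraction(1), Fraction(0)]  # start in state 0 with probability 1
f = [Fraction(0), Fraction(1)]   # indicator function of the set A = {1}

mu_K = left_action(mu, K)  # distribution after one jump
K_f = right_action(K, f)   # K_f[i] = probability of jumping from i into A

print(mu_K)  # [Fraction(9, 10), Fraction(1, 10)]
print(K_f)   # [Fraction(1, 10), Fraction(1, 2)]
```

When `f` is the indicator of a set $A$, `K_f` is exactly the function $x \mapsto K(x, A)$, and integrating it against `mu` gives the same number as evaluating `mu_K` on $A$.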

This is heavily influenced by https://www.randomservices.org/random/expect/Kernels.html.