Variational Auto-Encoders and the Expectation-Maximization Algorithm

Relationship between Variational Autoencoders (VAE) and the Expectation Maximization Algorithm

Latent Variable Models (LVM)

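Suppose we observe some data $\mathcal{D} = \{x_1, \ldots, x_N\}$ and assume that each observation $x$ comes with an unobserved latent variable $z$, so that the joint density factorizes as $p_\theta(x, z) = p_\theta(x \mid z)\, p_\theta(z)$ for some parameter $\theta$. We are typically interested in three tasks:

- Generative Modelling: Generating samples efficiently from the distributions defined by the model.

- Posterior Inference: Modelling the posterior distribution $p_\theta(z \mid x)$ over the latent variables.

- Parameter Estimation: Performing Maximum Likelihood Estimation (MLE) or Maximum-A-Posteriori estimation (MAP) for the parameter $\theta$.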

The settings and problems described above are quite standard and of widespread interest. One method to perform Maximum Likelihood Estimation in Latent Variable Models is the Expectation-Maximization algorithm, while a method to perform posterior inference is Mean-Field Variational Inference.

Expectation-Maximization Algorithm for Maximum Likelihood Estimation

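Suppose that, for some reason, we have a fairly good estimate for the parameter; let's call this guess $\theta_t$. The EM algorithm uses this guess to compute the posterior over the latent variables, and then uses that posterior to choose a better parameter value: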

- Initialize $\theta_0$.

- Until convergence:

  - E-step: Compute the posterior distribution of the latent variables given the observations, $p_{\theta_t}(z \mid x)$.

  - M-step: Choose the new parameter value $\theta_{t+1} = \arg\max_\theta \mathbb{E}_{p_{\theta_t}(z \mid x)}\left[\log p_\theta(x, z)\right]$.
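The two steps above can be sketched on a small example. The block below is a minimal illustration, not the author's code: it assumes a two-component, one-dimensional Gaussian mixture with equal weights and unit variances (so only the two means are estimated), and the data and the function names `em_step` and `run_em` are made up for this sketch.

```python
import math

def normal_pdf(x, mean, std=1.0):
    """Density of a univariate Gaussian N(mean, std^2) at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def log_likelihood(data, means):
    """Log marginal likelihood of an equal-weight, unit-variance 2-component mixture."""
    return sum(
        math.log(0.5 * normal_pdf(x, means[0]) + 0.5 * normal_pdf(x, means[1]))
        for x in data
    )

def em_step(data, means):
    """One EM iteration: posterior responsibilities, then closed-form mean update."""
    # E-step: posterior probability that each point came from component 0.
    resp0 = []
    for x in data:
        p0 = normal_pdf(x, means[0])
        p1 = normal_pdf(x, means[1])
        resp0.append(p0 / (p0 + p1))
    # M-step: responsibility-weighted averages maximise the expected
    # complete-data log-likelihood with respect to the two means.
    w0 = sum(resp0)
    w1 = len(data) - w0
    new0 = sum(r * x for r, x in zip(resp0, data)) / w0
    new1 = sum((1.0 - r) * x for r, x in zip(resp0, data)) / w1
    return (new0, new1)

def run_em(data, means, n_iter=20):
    """Run EM and record the log-likelihood before and after every iteration."""
    history = [log_likelihood(data, means)]
    for _ in range(n_iter):
        means = em_step(data, means)
        history.append(log_likelihood(data, means))
    return means, history

# Illustrative data: two clusters centred at -2 and +2.
data = [-2.1, -1.9, -2.0, -1.8, -2.2, 1.9, 2.1, 2.0, 2.2, 1.8]
means, history = run_em(data, means=(-1.0, 1.0))
print(means)
```

Both steps are available in closed form here, which is exactly the situation in which EM is practical; the recorded log-likelihood never decreases from one iteration to the next.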

Problem: The EM algorithm breaks if the posterior $p_{\theta_t}(z \mid x)$ or the expectation in the M-step are intractable.

Mean-Field Variational Inference for Posterior Inference

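A well-known alternative to MCMC for posterior inference is Mean-Field Variational Inference. In Variational Inference we define a family of distributions that we believe is representative of the true posterior distribution, and then search that family for the member closest to the posterior. In the mean-field family the approximation factorizes over the latent variables:

$$q(z_1, \ldots, z_N) = \prod_{i=1}^{N} q_{\phi_i}(z_i).$$

We can see that each factor in the product on the RHS is described by its own vector of parameters $\phi_i$, so the number of variational parameters grows with the number of observations.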

Problem: It clearly doesn't scale well to large datasets, and it breaks if the expectations needed to update the factors are intractable.

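Ideally, we would like to use only one vector of parameters $\phi$, shared across all data points, so that the approximate posterior for any observation $x$ is given by $q_\phi(z \mid x)$. This is known as amortized inference.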

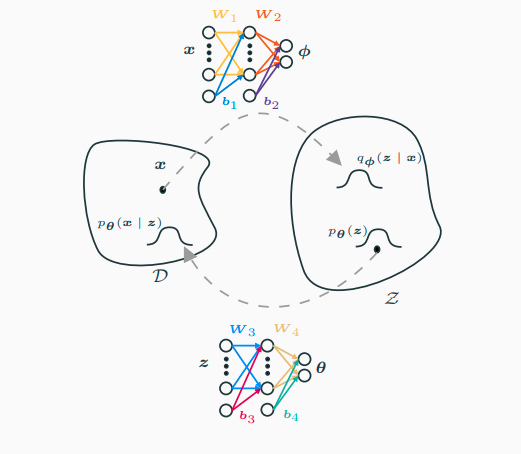
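To make the difference in parameter count concrete, here is a small sketch. It assumes a toy model that is not in the original text, $z \sim \mathcal{N}(0, 1)$ and $x \mid z \sim \mathcal{N}(z, 1)$, whose exact posterior is $z \mid x \sim \mathcal{N}(x/2, 1/2)$. The helper names and the linear form of the shared map are hypothetical; in practice the per-point parameters would be found by optimization rather than written down.

```python
import math

# Toy model (an assumption for illustration): z ~ N(0, 1), x | z ~ N(z, 1).
# Its exact posterior is z | x ~ N(x / 2, 1 / 2).

def mean_field_params(data):
    """One (mean, std) pair per observation: the parameter count grows with N."""
    return [(x / 2.0, math.sqrt(0.5)) for x in data]

def amortized_posterior(x, phi):
    """Shared parameters phi = (a, b, log_std) map any x to its (mean, std)."""
    a, b, log_std = phi
    return (a * x + b, math.exp(log_std))

data = [-1.0, 0.3, 2.5, 4.0]

# Mean-field: 2 * len(data) numbers, and a new observation needs new parameters.
per_point = mean_field_params(data)

# Amortized: 3 numbers in total, regardless of how many observations there are.
phi = (0.5, 0.0, 0.5 * math.log(0.5))
shared = [amortized_posterior(x, phi) for x in data]

# The shared map also handles an observation that was never seen before.
new_mean, new_std = amortized_posterior(10.0, phi)
print(per_point[0], shared[0], new_mean, new_std)
```

In this toy model a linear map recovers the exact posterior for every $x$; for richer models the shared map is typically a neural network and the result is only an approximation.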

Variational Autoencoders at a Glance

So what are Variational Autoencoders (VAE) and Auto-Encoding Variational Bayes (AEVB)? Below is a summary of what they are and what they are used for.

- What is AEVB used for? Inference and Generative Modelling in LVMs.

- How does AEVB work? By optimizing an unbiased estimator of an objective function using SGD.

- What are VAEs? They are AEVB in which the probability distributions of the LVM are parametrized by neural networks.

Auto-Encoding Variational Bayes Objective Function

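In this section, we derive the objective function of AEVB. First, let us introduce a so-called recognition model $q_\phi(z \mid x)$, an approximation to the true posterior $p_\theta(z \mid x)$ whose parameters $\phi$ are shared across data points. Using $\log p_\theta(z \mid x) = \log p_\theta(x, z) - \log p_\theta(x)$, the KL divergence between the two is

$$\mathrm{KL}\left(q_\phi(z \mid x) \,\|\, p_\theta(z \mid x)\right) = \mathbb{E}_{q_\phi(z \mid x)}\left[\log q_\phi(z \mid x) - \log p_\theta(z \mid x)\right] = \log p_\theta(x) - \mathbb{E}_{q_\phi(z \mid x)}\left[\log p_\theta(x, z) - \log q_\phi(z \mid x)\right].$$

Notice how we managed to write the KL divergence in terms of the log marginal likelihood $\log p_\theta(x)$. Writing $\mathcal{L}_{\theta,\phi}(x) = \mathbb{E}_{q_\phi(z \mid x)}\left[\log p_\theta(x, z) - \log q_\phi(z \mid x)\right]$ and using the fact that the KL divergence is non-negative, we obtain

$$\log p_\theta(x) = \mathcal{L}_{\theta,\phi}(x) + \mathrm{KL}\left(q_\phi(z \mid x) \,\|\, p_\theta(z \mid x)\right) \geq \mathcal{L}_{\theta,\phi}(x).$$

For this reason, we call $\mathcal{L}_{\theta,\phi}(x)$ the Evidence Lower BOund (ELBO).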
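The decomposition of the log marginal likelihood into a lower bound plus a KL divergence can be checked numerically. The sketch below assumes a toy model that is not in the original text, $z \sim \mathcal{N}(0, 1)$ and $x \mid z \sim \mathcal{N}(z, 1)$, with a Gaussian approximation $\mathcal{N}(m, s^2)$; for this model every term is available in closed form. All function names are hypothetical.

```python
import math

LOG_2PI = math.log(2 * math.pi)

def log_marginal(x):
    """log p(x) for the toy model, where marginally x ~ N(0, 2)."""
    return -0.5 * (LOG_2PI + math.log(2.0)) - x * x / 4.0

def elbo(x, m, s):
    """Closed-form E_q[log p(x, z) - log q(z)] for q = N(m, s^2)."""
    expected_log_lik = -0.5 * LOG_2PI - 0.5 * ((x - m) ** 2 + s * s)
    expected_log_prior = -0.5 * LOG_2PI - 0.5 * (m * m + s * s)
    entropy = 0.5 * (LOG_2PI + 1.0) + math.log(s)
    return expected_log_lik + expected_log_prior + entropy

def kl_gauss(m1, s1, m2, s2):
    """KL(N(m1, s1^2) || N(m2, s2^2)) between univariate Gaussians."""
    return math.log(s2 / s1) + (s1 * s1 + (m1 - m2) ** 2) / (2.0 * s2 * s2) - 0.5

def kl_to_posterior(x, m, s):
    """KL from q = N(m, s^2) to the exact posterior N(x / 2, 1 / 2)."""
    return kl_gauss(m, s, x / 2.0, math.sqrt(0.5))

x, m, s = 1.0, 0.0, 1.0
gap = log_marginal(x) - elbo(x, m, s)
print(gap, kl_to_posterior(x, m, s))  # the two numbers agree
```

The gap between $\log p(x)$ and the bound is exactly the KL divergence to the true posterior, and it vanishes when the approximation equals the posterior, here $m = x/2$ and $s^2 = 1/2$.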

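Why is it useful to find a lower bound on the log marginal likelihood? Because by maximizing the ELBO we kill two birds with one stone. Notice how, by maximizing the ELBO with respect to $\theta$, we push up a lower bound on the log marginal likelihood $\log p_\theta(x)$, while by maximizing it with respect to $\phi$ we shrink the KL divergence between $q_\phi(z \mid x)$ and the true posterior, since $\log p_\theta(x)$ does not depend on $\phi$.

Respectively, these maximizations have very practical results:

- The generative model $p_\theta(x, z)$ improves.

- The approximation $q_\phi(z \mid x)$ to the posterior improves.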

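Lastly, one can also write the ELBO in a different way. This second formulation is often convenient because the KL term can frequently be computed in closed form, so its estimates tend to have lower variance:

$$\mathcal{L}_{\theta,\phi}(x) = \mathbb{E}_{q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right] - \mathrm{KL}\left(q_\phi(z \mid x) \,\|\, p_\theta(z)\right).$$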

As can be seen above, the ELBO can be written as the sum of two terms: an expected reconstruction term (the expected log-likelihood under the approximation) and the negative KL divergence between the approximation and the latent prior. This KL divergence can be interpreted as a regularization term that tries to keep the approximation close to the prior. The regularization can sometimes backfire and force the approximation to be exactly equal to the prior. This scenario is called posterior collapse, and new methods such as delta-VAEs have been developed to deal with it.
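As a rough illustration of this second form, the sketch below estimates the reconstruction term by Monte Carlo and subtracts a KL divergence computed in closed form. It assumes a toy model that is not in the original text, $z \sim \mathcal{N}(0, 1)$ and $x \mid z \sim \mathcal{N}(z, 1)$, with approximation $\mathcal{N}(m, s^2)$; the function names and the chosen values of `x`, `m`, `s` are hypothetical.

```python
import math
import random

def kl_to_standard_normal(m, s):
    """Closed-form KL(N(m, s^2) || N(0, 1)): the regularization term."""
    return 0.5 * (s * s + m * m - 1.0) - math.log(s)

def log_lik(x, z):
    """log p(x | z) for the toy decoder x | z ~ N(z, 1)."""
    return -0.5 * math.log(2 * math.pi) - 0.5 * (x - z) ** 2

def elbo_estimate(x, m, s, n_samples, rng):
    """Monte Carlo reconstruction term minus the analytic KL term."""
    recon = 0.0
    for _ in range(n_samples):
        z = rng.gauss(m, s)          # z ~ q(z | x) = N(m, s^2)
        recon += log_lik(x, z)
    return recon / n_samples - kl_to_standard_normal(m, s)

def elbo_exact(x, m, s):
    """Same quantity with the reconstruction expectation done analytically."""
    recon = -0.5 * math.log(2 * math.pi) - 0.5 * ((x - m) ** 2 + s * s)
    return recon - kl_to_standard_normal(m, s)

rng = random.Random(0)
estimate = elbo_estimate(1.0, 0.3, 0.8, 20000, rng)
print(estimate, elbo_exact(1.0, 0.3, 0.8))
```

Only the reconstruction term is sampled here; the KL term contributes no sampling noise at all. Note that the KL term is zero when the approximation equals the prior, which is the collapsed solution described above.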

Optimization of the ELBO Objective Function

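One way to optimize the ELBO with respect to $\theta$ and $\phi$ is Stochastic Gradient Ascent, which requires unbiased estimates of its gradients. The difficulty is that the expectation in the ELBO is taken with respect to $q_\phi(z \mid x)$, which itself depends on $\phi$.

This means that when taking the gradient of the ELBO with respect to $\phi$, we cannot simply move the gradient inside the expectation and estimate it with samples from $q_\phi(z \mid x)$.

Can we write the expectation with respect to a distribution that is independent of $\phi$?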

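If we think about it, we already know a special case in which this can be done. For instance, suppose that the distribution $q_\phi(z \mid x)$ is a Gaussian $\mathcal{N}(\mu, \sigma^2)$ with $\phi = (\mu, \sigma)$. Then a sample can be written as $z = \mu + \sigma \epsilon$ with $\epsilon \sim \mathcal{N}(0, 1)$, and the distribution of $\epsilon$ does not depend on $\phi$.

More generally, we can write a sample $z \sim q_\phi(z \mid x)$ as a deterministic, differentiable transformation $z = g_\phi(\epsilon, x)$ of a noise variable $\epsilon \sim p(\epsilon)$ whose distribution does not depend on $\phi$, so that

$$\mathbb{E}_{q_\phi(z \mid x)}\left[f(z)\right] = \mathbb{E}_{p(\epsilon)}\left[f\left(g_\phi(\epsilon, x)\right)\right].$$

This is called the reparametrization trick. At this point we can finally obtain unbiased estimators of the ELBO (and of its gradients) based on samples $\epsilon_1, \ldots, \epsilon_L \sim p(\epsilon)$:

$$\tilde{\mathcal{L}}_{\theta,\phi}(x) = \frac{1}{L} \sum_{l=1}^{L} \left[\log p_\theta(x, z_l) - \log q_\phi(z_l \mid x)\right], \qquad z_l = g_\phi(\epsilon_l, x).$$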
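The trick is easiest to see on a function whose expectation is known. The sketch below is an illustration under assumptions of my own: it takes $f(z) = z^2$ and $z \sim \mathcal{N}(m, s^2)$, for which $\mathbb{E}[f(z)] = m^2 + s^2$ and the exact gradients are $2m$ and $2s$. The function name `reparam_gradients` is hypothetical.

```python
import random

def reparam_gradients(m, s, n_samples, rng):
    """Estimate d/dm and d/ds of E_{z ~ N(m, s^2)}[z^2] using z = m + s * eps."""
    grad_m = 0.0
    grad_s = 0.0
    for _ in range(n_samples):
        eps = rng.gauss(0.0, 1.0)   # noise whose distribution ignores (m, s)
        z = m + s * eps             # deterministic and differentiable in (m, s)
        df_dz = 2.0 * z             # derivative of f(z) = z^2
        grad_m += df_dz * 1.0       # chain rule: dz/dm = 1
        grad_s += df_dz * eps       # chain rule: dz/ds = eps
    return grad_m / n_samples, grad_s / n_samples

rng = random.Random(0)
g_m, g_s = reparam_gradients(1.0, 0.5, 50000, rng)
print(g_m, g_s)  # close to the exact gradients 2 * m = 2.0 and 2 * s = 1.0
```

Because the randomness now comes from `eps` alone, the gradient passes through the sample `z` by the ordinary chain rule, and averaging over noise draws gives an unbiased gradient estimate.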

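Then to perform Stochastic Gradient Ascent we simply sample a mini-batch of data from $\mathcal{D}$, draw fresh noise $\epsilon$ for each point in the batch, and take a gradient step in $\theta$ and $\phi$ on the resulting estimate of the ELBO.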

We call this Auto-Encoding Variational Bayes.
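The whole procedure can be sketched end to end on a model small enough to differentiate by hand. Everything below is an assumption made for illustration and not the author's implementation: the generative model is $z \sim \mathcal{N}(0, 1)$, $x \mid z \sim \mathcal{N}(z + \theta, 1)$, the recognition model is $q_\phi(z \mid x) = \mathcal{N}(ax + b, e^{2c})$ with $\phi = (a, b, c)$, and the gradients of the single-sample ELBO estimate (reconstruction term minus closed-form KL to the prior) are written out manually instead of using automatic differentiation.

```python
import math
import random

def aevb_toy(n_steps=6000, batch_size=32, lr=0.01, true_theta=1.0, seed=0):
    """Stochastic gradient ascent on a reparametrized ELBO estimate."""
    rng = random.Random(seed)
    theta = 0.0                 # generative parameter: x | z ~ N(z + theta, 1)
    a, b, c = 0.0, 0.0, 0.0     # recognition model: q(z | x) = N(a*x + b, exp(c)^2)
    for _ in range(n_steps):
        g_theta = g_a = g_b = g_c = 0.0
        for _ in range(batch_size):
            # One observation drawn from the true model (z first, then x).
            x = rng.gauss(0.0, 1.0) + true_theta + rng.gauss(0.0, 1.0)
            m, s = a * x + b, math.exp(c)
            eps = rng.gauss(0.0, 1.0)
            z = m + s * eps                    # reparametrized sample from q
            resid = x - z - theta              # d log p(x|z) / dz, also d / dtheta
            d_m = resid - m                    # minus dKL/dm, where dKL/dm = m
            d_s = resid * eps - (s - 1.0 / s)  # dz/ds = eps, dKL/ds = s - 1/s
            g_theta += resid
            g_a += d_m * x                     # chain rule through m = a*x + b
            g_b += d_m
            g_c += d_s * s                     # chain rule through s = exp(c)
        theta += lr * g_theta / batch_size
        a += lr * g_a / batch_size
        b += lr * g_b / batch_size
        c += lr * g_c / batch_size
    return theta, a, b, math.exp(c)

theta, a, b, s = aevb_toy()
print(theta, a, b, s)
```

For this toy model the exact answers are known: the maximum likelihood value of $\theta$ is the data mean (here `true_theta`), and the exact posterior is $\mathcal{N}((x - \theta)/2, 1/2)$, so the run should end near $a = 0.5$, $b = -\theta/2$ and $s = \sqrt{0.5}$. The generative and recognition parameters are updated together in every step, each from the same mini-batch estimate.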

Estimating the Marginal Likelihood

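After training, one can estimate the log marginal likelihood by using importance sampling, with the recognition model as the proposal distribution:

$$\log p_\theta(x) \approx \log \frac{1}{K} \sum_{k=1}^{K} \frac{p_\theta(x, z_k)}{q_\phi(z_k \mid x)}, \qquad z_k \sim q_\phi(z \mid x).$$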
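A minimal sketch of such an estimator follows. It assumes a toy model that is not in the original text, $z \sim \mathcal{N}(0, 1)$ and $x \mid z \sim \mathcal{N}(z, 1)$, for which the exact answer $\log \mathcal{N}(x; 0, 2)$ is available for comparison. The proposal $\mathcal{N}(m, s^2)$ stands in for a trained recognition model, and all function names are hypothetical.

```python
import math
import random

def log_joint(x, z):
    """log p(x, z) for the toy model z ~ N(0, 1), x | z ~ N(z, 1)."""
    return -math.log(2 * math.pi) - 0.5 * z * z - 0.5 * (x - z) ** 2

def log_q(z, m, s):
    """Log-density of the proposal q(z | x) = N(m, s^2)."""
    return -0.5 * math.log(2 * math.pi) - math.log(s) - 0.5 * ((z - m) / s) ** 2

def log_marginal_is(x, m, s, n_samples, rng):
    """Importance sampling estimate of log p(x) using q as the proposal."""
    log_w = []
    for _ in range(n_samples):
        z = rng.gauss(m, s)
        log_w.append(log_joint(x, z) - log_q(z, m, s))
    top = max(log_w)  # log-sum-exp: subtract the maximum for numerical stability
    return top + math.log(sum(math.exp(w - top) for w in log_w) / n_samples)

def log_marginal_exact(x):
    """Exact log p(x): marginally x ~ N(0, 2) in this toy model."""
    return -0.5 * math.log(4 * math.pi) - x * x / 4.0

rng = random.Random(0)
estimate = log_marginal_is(1.0, 0.3, 0.9, 20000, rng)
print(estimate, log_marginal_exact(1.0))
```

The proposal here is deliberately not the exact posterior, yet the estimate is still close. The closer the proposal is to the true posterior, the lower the variance of the importance weights.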

Variational Autoencoders

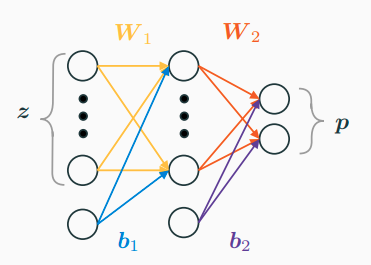

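Before introducing what a Variational Autoencoder is, we need to understand what we mean when we say that we parametrise a distribution using a neural network. Suppose that $p_\theta(x \mid z)$ is, for example, a Gaussian whose mean and standard deviation are the outputs of a neural network $f_\theta$ with weights $\theta$ that takes $z$ as input:

$$\left(\mu_\theta(z), \sigma_\theta(z)\right) = f_\theta(z), \qquad p_\theta(x \mid z) = \mathcal{N}\left(x;\, \mu_\theta(z),\, \mathrm{diag}\left(\sigma_\theta(z)^2\right)\right),$$

and the log-likelihood is, of course

$$\log p_\theta(x \mid z) = \sum_{j} \log \mathcal{N}\left(x_j;\, \mu_{\theta,j}(z),\, \sigma_{\theta,j}(z)^2\right).$$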

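A Variational Autoencoder is simply Auto-Encoding Variational Bayes where both the approximating distribution $q_\phi(z \mid x)$ and the generative distribution $p_\theta(x \mid z)$ are parametrized by neural networks, usually called the encoder and the decoder respectively.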
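As a sketch of what "parametrized by a neural network" means in code, the block below builds a tiny one-hidden-layer network in plain Python whose outputs are read as the mean and log standard deviation of a diagonal Gaussian. The layer sizes, the random initialization and all function names are assumptions made for illustration; a real VAE would use a deep learning library and trained weights.

```python
import math
import random

def init_mlp(n_in, n_hidden, n_out, rng):
    """Random weights for a one-hidden-layer network (illustrative sizes)."""
    w1 = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [[rng.gauss(0.0, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
    b2 = [0.0] * n_out
    return w1, b1, w2, b2

def mlp(params, inputs):
    """Forward pass: tanh hidden layer followed by a linear output layer."""
    w1, b1, w2, b2 = params
    hidden = [
        math.tanh(sum(w * v for w, v in zip(row, inputs)) + bias)
        for row, bias in zip(w1, b1)
    ]
    return [sum(w * h for w, h in zip(row, hidden)) + bias for row, bias in zip(w2, b2)]

def decoder_distribution(params, z, x_dim):
    """Read the network output as the mean and std of p(x | z)."""
    out = mlp(params, z)
    mean = out[:x_dim]
    std = [math.exp(v) for v in out[x_dim:]]  # network emits log-std, so std > 0
    return mean, std

def gaussian_log_likelihood(x, mean, std):
    """log N(x; mean, diag(std^2)), summed over the dimensions of x."""
    return sum(
        -0.5 * math.log(2 * math.pi) - math.log(s) - 0.5 * ((xi - mu) / s) ** 2
        for xi, mu, s in zip(x, mean, std)
    )

rng = random.Random(0)
z_dim, x_dim = 2, 3
theta = init_mlp(z_dim, 4, 2 * x_dim, rng)   # outputs: x_dim means + x_dim log-stds
mean, std = decoder_distribution(theta, [0.1, -0.4], x_dim)
log_lik = gaussian_log_likelihood([0.0, 1.0, -1.0], mean, std)
print(mean, std, log_lik)
```

An encoder is built the same way with the roles swapped: the network takes $x$ as input and its outputs are read as the mean and log standard deviation of the approximation over $z$.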

Relationship between EM algorithm and VAE

So what is the relationship between the Expectation-Maximization algorithm and Variational Autoencoders? To get there we need to understand how the EM algorithm can be cast into the framework of Variational Inference. Recall that the EM algorithm proceeds in the following two steps:

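- Compute the “current” posterior (which we can call approximate since it is based on the current guess $\theta_t$ rather than on the optimal parameter): $q(z) = p_{\theta_t}(z \mid x)$.

- Find the optimal parameter $\theta_{t+1} = \arg\max_\theta \mathbb{E}_{p_{\theta_t}(z \mid x)}\left[\log p_\theta(x, z)\right]$.

Now consider the ELBO in its two different forms, but now rather than considering it as a function of $\theta$ and $\phi$, consider it as a function of $\theta$ and of the distribution $q$ itself:

$$\mathcal{L}(q, \theta) = \log p_\theta(x) - \mathrm{KL}\left(q(z) \,\|\, p_\theta(z \mid x)\right) = \mathbb{E}_{q(z)}\left[\log p_\theta(x, z)\right] - \mathbb{E}_{q(z)}\left[\log q(z)\right].$$

We can find two steps identical to those of the EM algorithm by maximizing the ELBO first with respect to $q$ and then with respect to $\theta$:

- E-step: Maximize $\mathcal{L}(q, \theta_t)$ with respect to $q$. From the first form, the maximizer is $q(z) = p_{\theta_t}(z \mid x)$, which makes the KL divergence zero.

- M-step: Maximize $\mathcal{L}(q, \theta)$ with respect to $\theta$ with $q$ held fixed. From the second form, since the entropy term does not depend on $\theta$, this gives $\theta_{t+1} = \arg\max_\theta \mathbb{E}_{q(z)}\left[\log p_\theta(x, z)\right]$.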
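The coordinate-ascent view can be checked on a model where both steps are available in closed form. The sketch below assumes a toy model that is not in the original text, $z \sim \mathcal{N}(0, 1)$ and $x \mid z \sim \mathcal{N}(z + \theta, 1)$, so that the posterior is $\mathcal{N}((x - \theta)/2, 1/2)$ and marginally $x \sim \mathcal{N}(\theta, 2)$. The data and function names are hypothetical.

```python
import math

LOG_2PI = math.log(2 * math.pi)

def log_likelihood(data, theta):
    """Exact log marginal likelihood: each x ~ N(theta, 2)."""
    return sum(-0.5 * (LOG_2PI + math.log(2.0)) - (x - theta) ** 2 / 4.0 for x in data)

def elbo(data, means, s, theta):
    """Closed-form ELBO with one factor q_i = N(means[i], s^2) per observation."""
    total = 0.0
    for x, m in zip(data, means):
        total += -0.5 * LOG_2PI - 0.5 * ((x - m - theta) ** 2 + s * s)  # E_q[log p(x|z)]
        total += -0.5 * LOG_2PI - 0.5 * (m * m + s * s)                # E_q[log p(z)]
        total += 0.5 * (LOG_2PI + 1.0) + math.log(s)                   # entropy of q
    return total

def e_step(data, theta):
    """Maximize the ELBO over q: set q to the exact posterior at theta."""
    return [(x - theta) / 2.0 for x in data], math.sqrt(0.5)

def m_step(data, means):
    """Maximize the ELBO over theta with q fixed (closed form here)."""
    return sum(x - m for x, m in zip(data, means)) / len(data)

def em_as_coordinate_ascent(data, theta=0.0, n_iter=30):
    """Alternate the two maximizations, recording the ELBO around each M-step."""
    trace = []
    for _ in range(n_iter):
        means, s = e_step(data, theta)
        before = elbo(data, means, s, theta)   # bound is tight after the E-step
        theta = m_step(data, means)
        trace.append((before, elbo(data, means, s, theta)))
    return theta, trace

data = [0.5, 1.5, 2.0, 3.0, 0.0]
theta_hat, trace = em_as_coordinate_ascent(data)
print(theta_hat)  # approaches the sample mean of the data, 1.4
```

After each E-step the ELBO equals the log-likelihood at the current parameter, and each M-step can only increase it, which is why the iterates climb towards the maximum likelihood estimate.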

The relationship between the Expectation Maximization algorithm and Variational Auto-Encoders can therefore be summarized as follows:

- EM algorithm and VAE optimize the same objective function.

- When expectations are in closed-form, one should use the EM algorithm which uses coordinate ascent.

- When expectations are intractable, VAE uses stochastic gradient ascent on an unbiased estimator of the objective function.

Bibliography

“VAE = Em.” 2017. Machine Thoughts. https://machinethoughts.wordpress.com/2017/10/02/vae-em/.

“The Variational Auto-Encoder.” n.d. https://ermongroup.github.io/cs228-notes/extras/vae/.