## Discrete Distributions, PMF and CDF

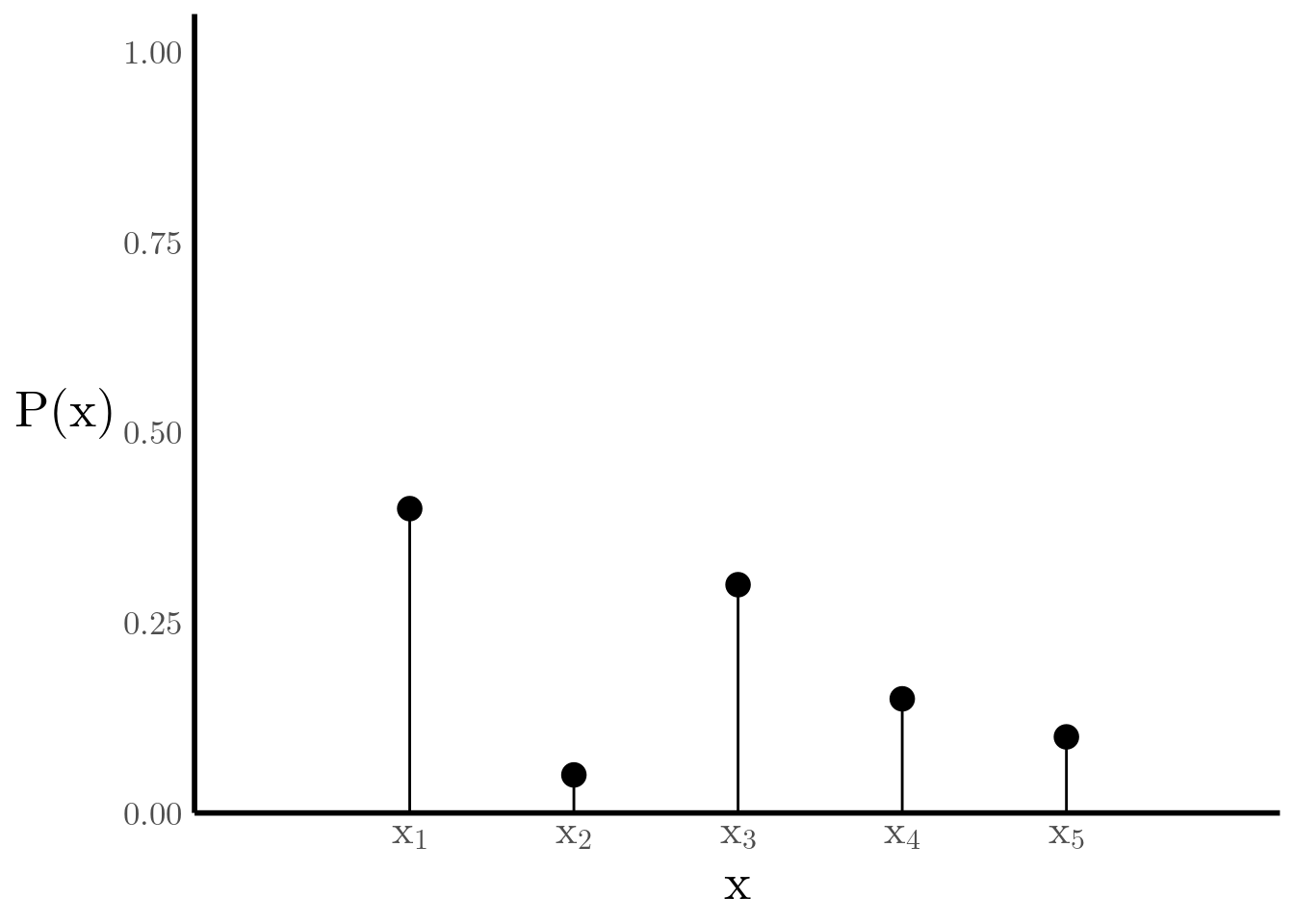

Suppose we have a discrete random variable $X$ taking $n$ different values $x_1, \ldots, x_n$, with $P(X = x_i) = p_i$:

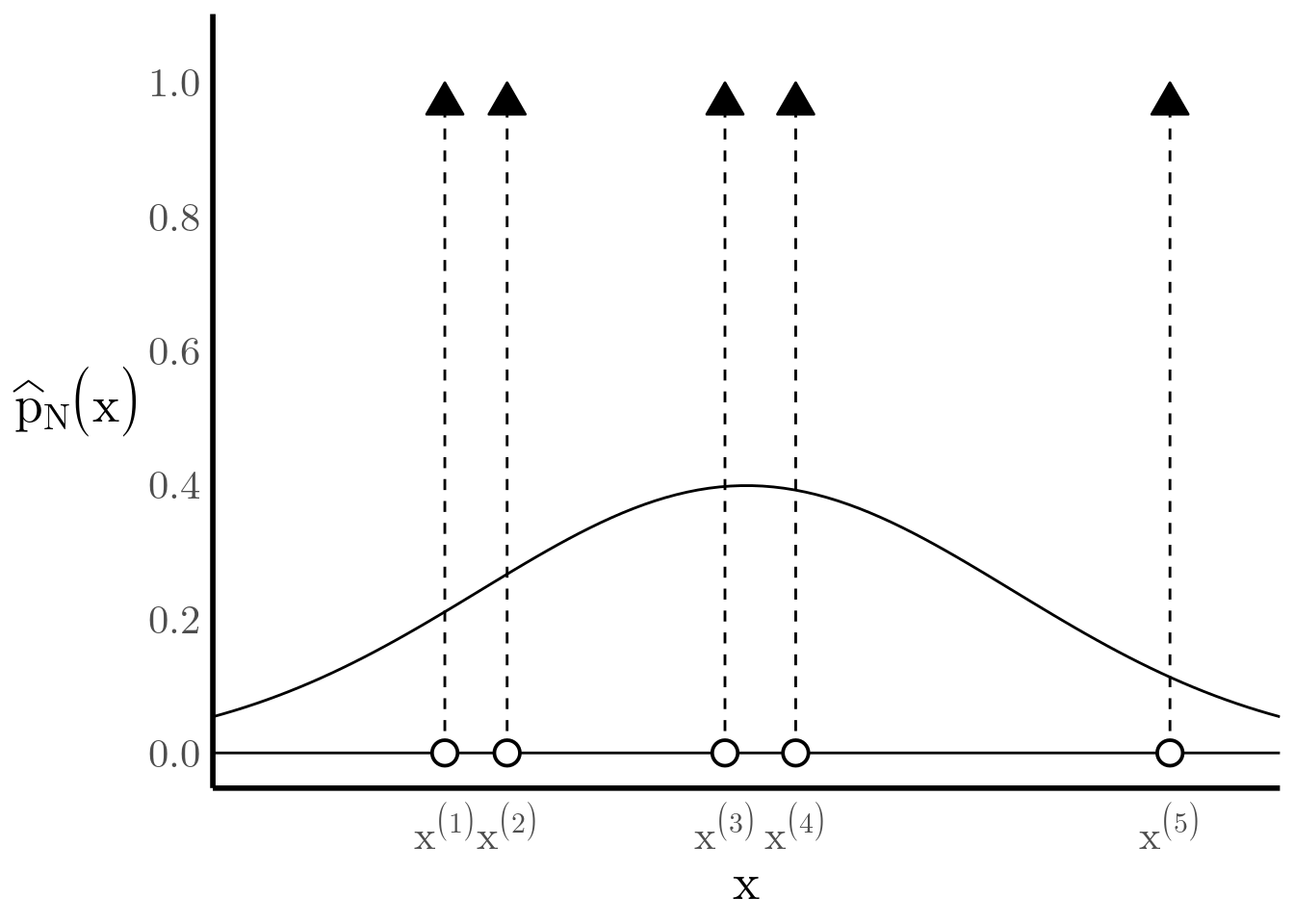

where $p_i \geq 0$ and $\sum_{i=1}^{n} p_i = 1$. Its probability mass function is then equal to $p_i$ at $x_i$ and $0$ everywhere else, as shown in Figure 1.

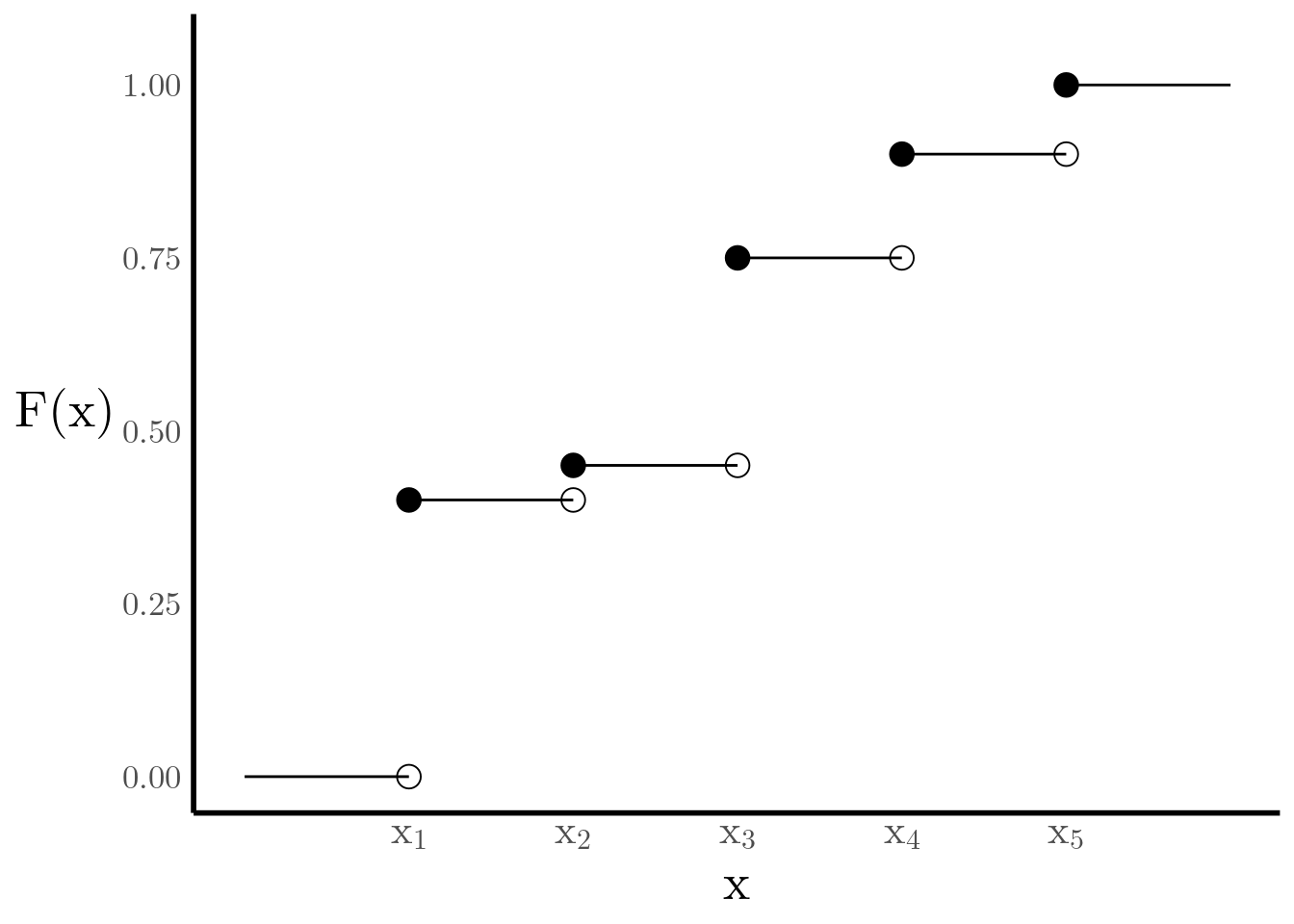

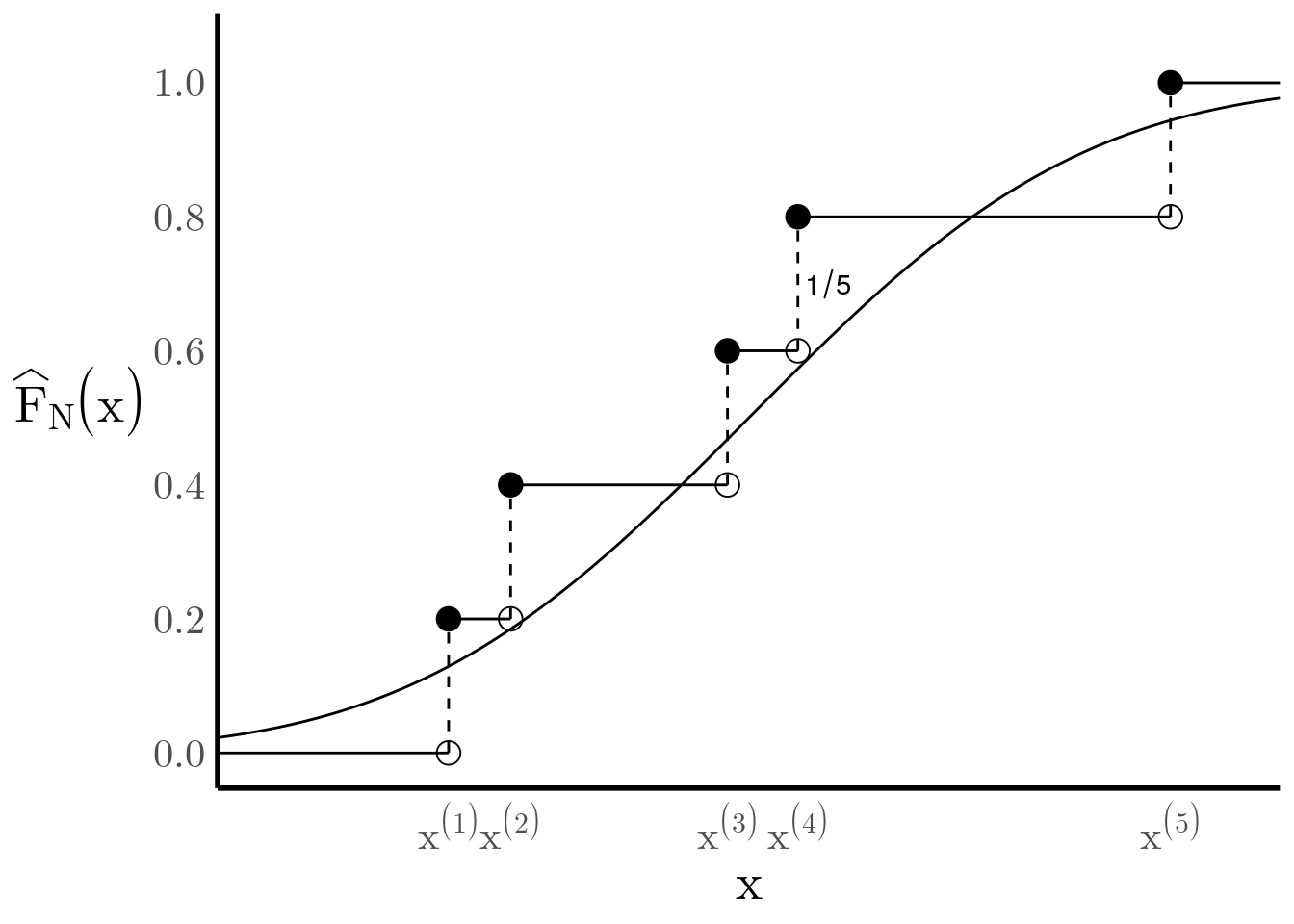

The cumulative distribution function of $X$, denoted $F_X(x)$, gives us the probability that the value of $X$ is less than or equal to $x$ (see Figure 2):

$$F_X(x) = P(X \leq x) = \sum_{i \,:\, x_i \leq x} p_i.$$
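The two definitions above can be sketched in a few lines of Python. The support points `values` and probabilities `probs` below are hypothetical, chosen only for illustration, and the function names are not from the text.

```python
import numpy as np

# Hypothetical support points x_i and probabilities p_i (not from the text).
values = np.array([-1.0, 0.5, 2.0, 3.0])
probs = np.array([0.1, 0.4, 0.3, 0.2])

def pmf(x):
    """Return P(X = x): p_i if x equals some x_i, and 0 everywhere else."""
    match = np.isclose(values, x)
    return float(probs[match].sum())

def cdf(x):
    """Return P(X <= x) by summing the mass at every x_i <= x."""
    return float(probs[values <= x].sum())

print(pmf(0.5), pmf(1.0))   # mass at a support point, then off the support
print(cdf(-2.0), cdf(2.0), cdf(10.0))
```

The CDF is flat between support points and jumps by $p_i$ at each $x_i$, which is the staircase shape described for Figure 2.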

## Rewriting Discrete Distributions as Continuous Distributions

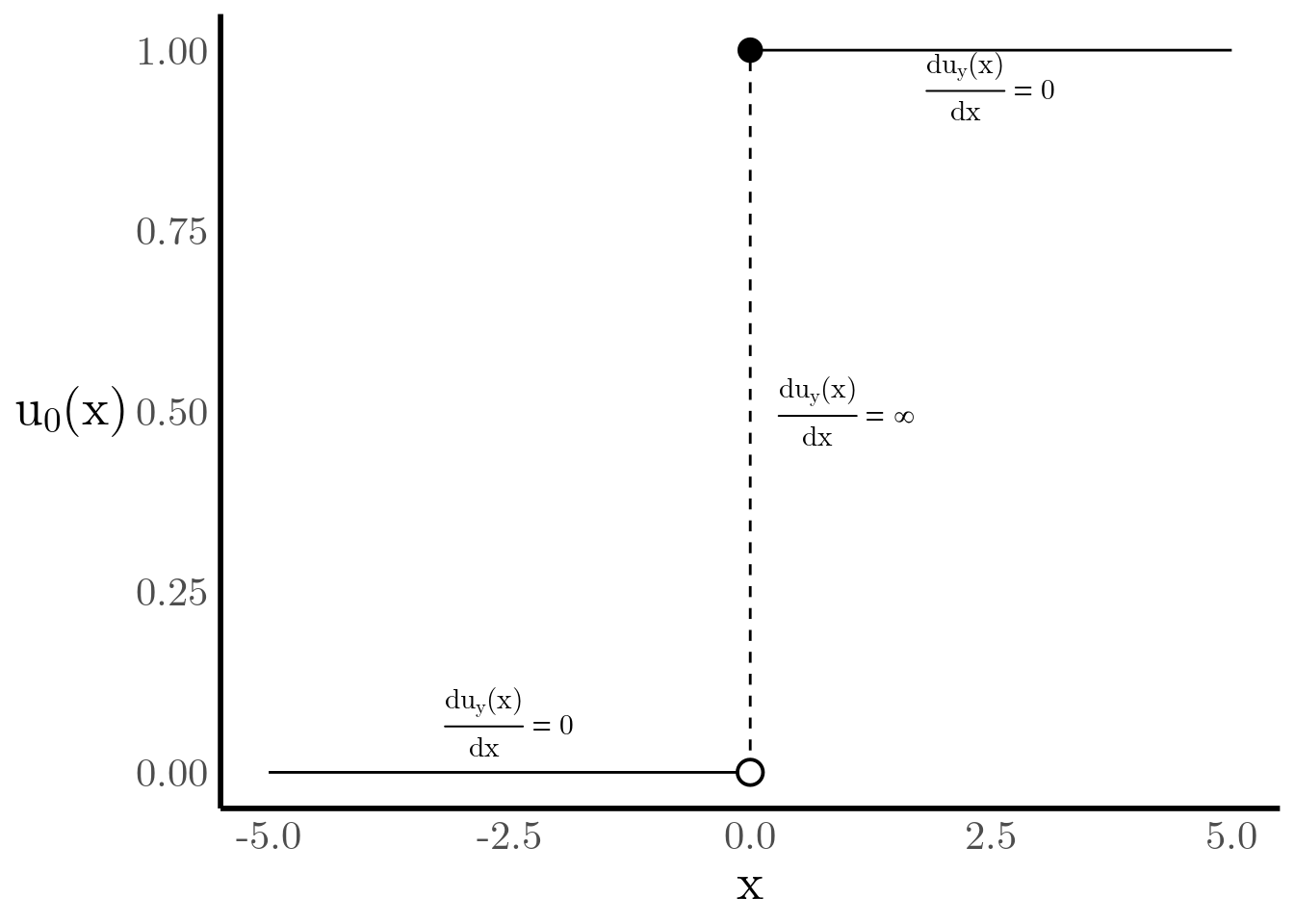

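We can also express the CDF as a sum of step functions. For this purpose we define the unit step function

$$H(x) = \begin{cases} 1 & \text{if } x \geq 0, \\ 0 & \text{if } x < 0, \end{cases}$$

and then rewrite the CDF as the weighted sum $F_X(x) = \sum_{i=1}^{n} p_i \, H(x - x_i)$: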

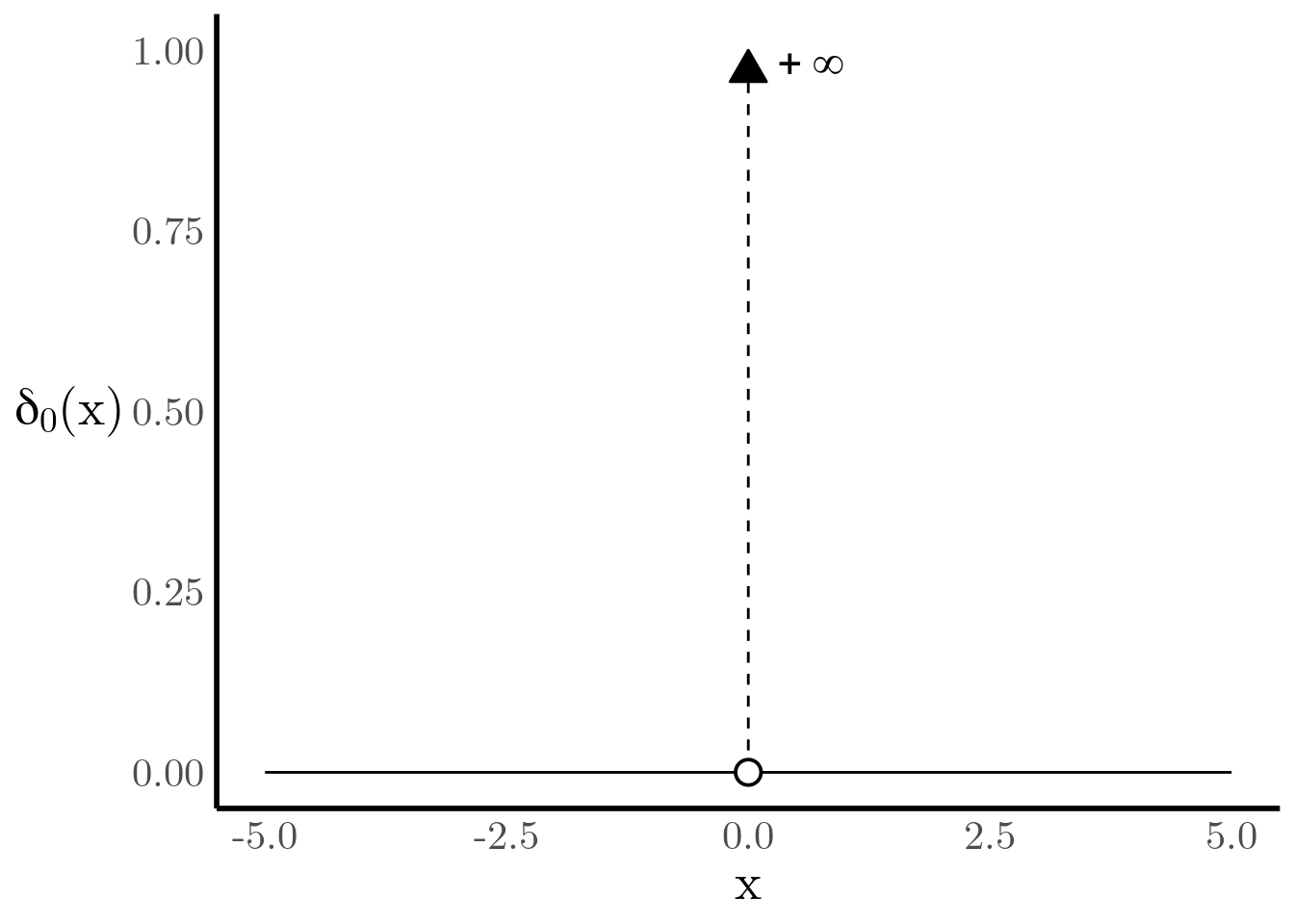
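As a quick check of this rewriting, the sketch below compares a weighted sum of unit steps with a CDF computed directly from its definition. It assumes the step function takes the value 1 at the jump (so the CDF stays right-continuous) and uses hypothetical `values` and `probs`; `np.heaviside(x, 1.0)` is NumPy's step function with that convention.

```python
import numpy as np

values = np.array([-1.0, 0.5, 2.0, 3.0])   # hypothetical x_i
probs = np.array([0.1, 0.4, 0.3, 0.2])     # hypothetical p_i

def step(x):
    """Unit step with step(0) = 1, so the resulting CDF is right-continuous."""
    return np.heaviside(x, 1.0)

def cdf_from_steps(x):
    """F(x) = sum_i p_i * step(x - x_i), for scalar or array x."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    # Broadcast to shape (len(x), len(values)), then sum over the x_i axis.
    return (probs * step(x[:, None] - values)).sum(axis=1)

grid = np.array([-2.0, -1.0, 0.0, 0.5, 2.5, 3.0])
direct = np.array([probs[values <= g].sum() for g in grid])   # P(X <= g)
steps = cdf_from_steps(grid)
print(np.allclose(direct, steps))
```

Each term contributes nothing to the left of $x_i$ and exactly $p_i$ from $x_i$ onwards, so the two computations agree at every point of the grid, including the jump locations.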

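This way of writing the CDF is more useful because, if we can find a suitable derivative for the step function, then we can differentiate the CDF term by term and obtain an approximation to the PDF. Unfortunately, the step function has a discontinuity at $x = 0$, so it has no derivative there in the ordinary sense. The solution to this problem lies in defining the derivative of the step function ourselves. We call this the Dirac delta function $\delta(x)$, and it satisfies the following properties (for any continuous function $g$ and any point $a$):

$$\int_{-\infty}^{\infty} \delta(x)\,dx = 1, \qquad \int_{-\infty}^{\infty} g(x)\,\delta(x - a)\,dx = g(a).$$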

Now we can find an approximate continuous PDF for $X$ by taking the derivative of $F_X$ term by term:

$$f_X(x) = \frac{d}{dx} F_X(x) = \sum_{i=1}^{n} p_i \, \delta(x - x_i).$$
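One way to build intuition for this PDF is to replace each Dirac delta with a very narrow Gaussian and integrate numerically. The sketch below is only an illustration under that smoothing assumption: the width `eps`, the grid, the test function `g` and the values `values`, `probs` are all hypothetical choices, not part of the original derivation.

```python
import numpy as np

values = np.array([-1.0, 0.5, 2.0, 3.0])   # hypothetical x_i
probs = np.array([0.1, 0.4, 0.3, 0.2])     # hypothetical p_i

def delta_approx(x, eps):
    """Gaussian with standard deviation eps; it concentrates at 0 as eps -> 0."""
    return np.exp(-0.5 * (x / eps) ** 2) / (eps * np.sqrt(2.0 * np.pi))

def pdf_approx(x, eps):
    """sum_i p_i * delta(x - x_i), with each delta smoothed to width eps."""
    return (probs * delta_approx(x[:, None] - values, eps)).sum(axis=1)

grid = np.linspace(-5.0, 7.0, 240_001)   # fine grid covering all x_i
dx = grid[1] - grid[0]
g = np.cos                               # any smooth test function

f = pdf_approx(grid, eps=0.01)
total_mass = np.sum(f) * dx              # Riemann sum, should be close to 1
integral = np.sum(g(grid) * f) * dx      # integral of g(x) f(x) dx
exact = np.sum(probs * g(values))        # sum_i p_i g(x_i)
print(total_mass, integral, exact)
```

As `eps` shrinks, the integral of $g$ against the smoothed PDF approaches $\sum_i p_i \, g(x_i)$, which is what the sifting property of the Dirac delta gives exactly.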

## Continuous Approximation of an Empirical PDF

Suppose now that we obtain $n$ realizations $x_1, \ldots, x_n$ of a sample from a probability density function $f$. Since we only have a finite number of such samples, we need to find a way of obtaining an approximate representation of $f$ from them. One step towards this goal is to construct the empirical distribution function

$$\hat{F}_n(x) = \frac{1}{n} \sum_{i=1}^{n} \mathbb{1}\{x_i \leq x\},$$

which uses the indicator function to count how many observations of the sample are less than or equal to $x$ and then divides that count by $n$, the number of samples. Another way of interpreting it is that it assigns probability $1/n$ to each sample. Practically, this means that $\hat{F}_n$ will be conceptually similar to Figure 2, but with jumps of $1/n$ at every sample.
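A minimal sketch of the empirical distribution function follows, written directly as an average of indicators. The function name `ecdf` and the five observations are hypothetical and serve only as an example.

```python
import numpy as np

def ecdf(samples, x):
    """F_hat(x) = (1/n) * #{i : x_i <= x}, for scalar or array x."""
    samples = np.asarray(samples, dtype=float)
    x = np.atleast_1d(np.asarray(x, dtype=float))
    # Indicator matrix of shape (len(x), n): True where sample i is <= x.
    indicators = samples[None, :] <= x[:, None]
    # Averaging the indicators counts the matches and divides by n.
    return indicators.mean(axis=1)

samples = [0.3, -1.2, 2.0, 0.3, 1.1]   # hypothetical observations
print(ecdf(samples, [-2.0, 0.3, 1.5, 2.0]))
```

For these five observations the function evaluates to 0, 0.6, 0.8 and 1 at the four query points. The value 0.3 appears twice in the sample, so the jump there is $2/n$ rather than $1/n$; with continuous data such ties almost never occur.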

For instance, suppose that we sample $n$ times from a standard normal distribution and sort the samples in increasing order, as shown in Figure 5.

We can build a CDF with jumps of $1/n$ at every sample (see Figure 6), and we can see that it gives a decent approximation of the true CDF. Importantly, by the Glivenko-Cantelli theorem, the empirical CDF converges uniformly to the true CDF, almost surely, as $n$ increases.
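The convergence can be observed numerically by measuring the largest gap between the empirical CDF and the standard normal CDF for growing sample sizes. The sketch below is one way to do this; the seed, the sample sizes and the helper names are arbitrary assumptions, and the normal CDF is computed from the error function in Python's `math` module.

```python
import math
import numpy as np

def normal_cdf(x):
    """Standard normal CDF, Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))."""
    return np.array([0.5 * (1.0 + math.erf(v / math.sqrt(2.0))) for v in x])

def sup_distance(samples):
    """Largest absolute gap between the empirical CDF and the normal CDF."""
    xs = np.sort(samples)
    n = len(xs)
    true = normal_cdf(xs)
    upper = np.arange(1, n + 1) / n   # empirical CDF at each sample, after its jump
    lower = np.arange(0, n) / n       # empirical CDF just before each sample
    # The supremum is attained at a sample point, on one side of a jump.
    return max(np.max(np.abs(upper - true)), np.max(np.abs(true - lower)))

rng = np.random.default_rng(0)   # seed chosen arbitrarily
for n in (10, 100, 1_000, 10_000):
    print(n, sup_distance(rng.standard_normal(n)))
```

The printed gap varies from run to run and need not decrease at every step, but it typically shrinks at a rate of roughly $1/\sqrt{n}$.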

At this point, we can follow the same steps as above: rewrite the empirical CDF as a weighted sum of step functions, and then take the derivative to find an approximate PDF using the Dirac delta function:

$$\hat{f}_n(x) = \frac{d}{dx} \hat{F}_n(x) = \frac{1}{n} \sum_{i=1}^{n} \delta(x - x_i).$$
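A practical consequence of writing the empirical PDF as a sum of Dirac deltas with weights $1/n$ is that integrating any function against it reduces to a sample average. The sketch below illustrates this with standard normal samples; the seed, the sample size and the helper name `empirical_expectation` are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)             # seed chosen arbitrarily
samples = rng.standard_normal(50_000)      # draws from a standard normal

def empirical_expectation(g, samples):
    """Integral of g(x) * (1/n) * sum_i delta(x - x_i) dx = (1/n) * sum_i g(x_i)."""
    return float(np.mean(g(samples)))

# Second moment of a standard normal; the true value is 1.
second_moment = empirical_expectation(lambda x: x ** 2, samples)

# P(X <= 0), recovered by integrating an indicator; the true value is 0.5.
prob_below_zero = empirical_expectation(lambda x: x <= 0.0, samples)

print(second_moment, prob_below_zero)
```

Integrating the indicator of $(-\infty, x]$ in this way recovers the empirical CDF at $x$, so the delta representation and the step-function representation describe the same object.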