Reinforcement Learning from Human Feedback

Reinforcement Learning from Human Feedback (RLHF) was popularized for aligning language models by the InstructGPT paper. Suppose that

Reinforcement Learning Recap

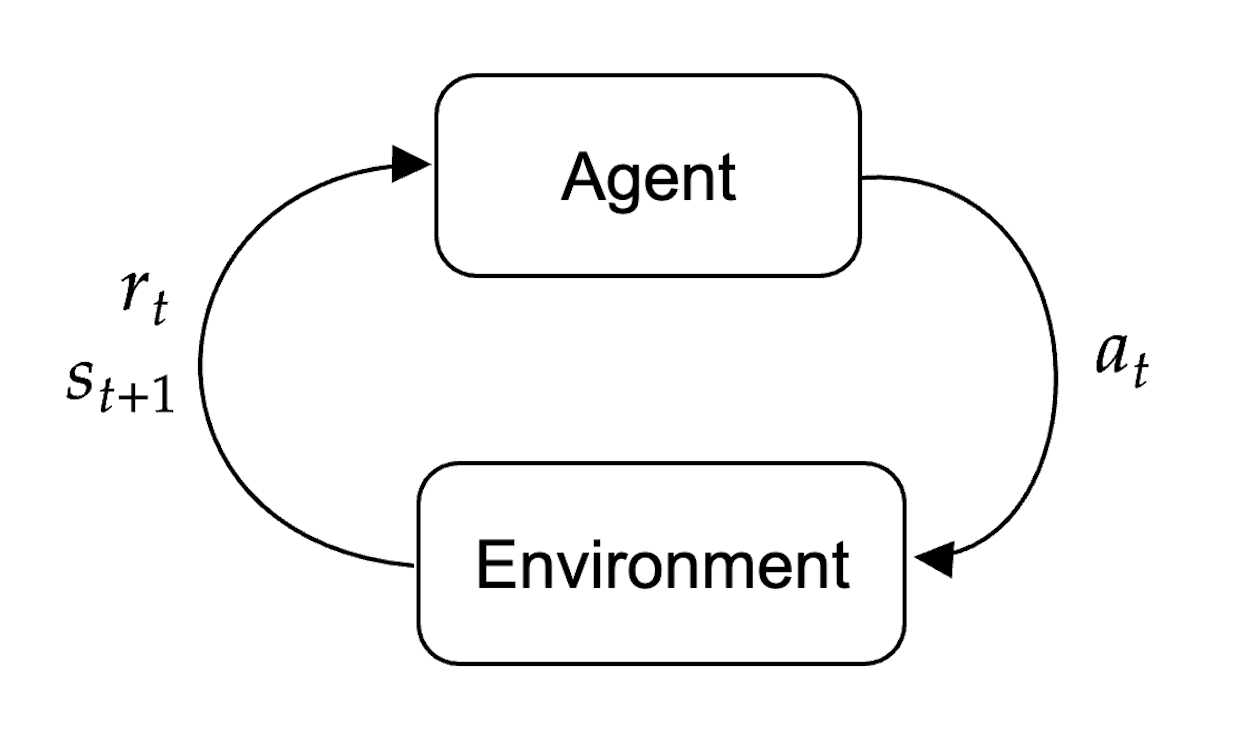

Reinforcement learning works as follows. In an environment with state

The aim is to learn a policy that maximizes the expected reward.
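As a minimal sketch of this objective, assume a single-state problem with three discrete actions and made-up rewards. The helper names `expected_reward` and `monte_carlo_reward` are illustrative, not from the source. The expected reward of a softmax policy can be computed exactly, or estimated by sampling actions from the policy:

```python
import math
import random

def softmax(logits):
    """Turn a list of logits into a probability distribution."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def expected_reward(logits, rewards):
    """Exact expected reward E_{a ~ pi}[r(a)] for a single-state problem."""
    probs = softmax(logits)
    return sum(p * r for p, r in zip(probs, rewards))

def monte_carlo_reward(logits, rewards, n_samples=10_000, seed=0):
    """Estimate the same expectation by sampling actions from the policy."""
    rng = random.Random(seed)
    probs = softmax(logits)
    actions = rng.choices(range(len(probs)), weights=probs, k=n_samples)
    return sum(rewards[a] for a in actions) / n_samples

rewards = [1.0, 0.0, -1.0]     # hypothetical reward for each action
uniform = [0.0, 0.0, 0.0]      # policy with equal probability on every action
greedy_ish = [5.0, 0.0, 0.0]   # policy that strongly favors the best action
print(expected_reward(uniform, rewards))  # 0.0
print(expected_reward(greedy_ish, rewards), monte_carlo_reward(greedy_ish, rewards))
```

A policy that puts more probability on high-reward actions has a higher expected reward. RL methods adjust the policy parameters (here, the logits) to push it in that direction.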

- Agent: Pre-trained and fine-tuned language model

- State: Prompt (together with the tokens generated so far)

- Policy: Next-token distribution given the current state

- Next state: The current state extended with a token sampled from the categorical distribution represented by the LM
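The mapping above can be made concrete with a toy sketch. The vocabulary and the bigram-style `toy_lm_logits` function are hypothetical stand-ins for a real language model. The point is only that the policy is a softmax over next-token logits, the action is a sampled token, and the next state is the current state with that token appended:

```python
import math
import random

VOCAB = ["<eos>", "the", "cat", "sat"]  # hypothetical toy vocabulary

def toy_lm_logits(state):
    """Hypothetical stand-in for an LM: next-token logits given the state.
    For simplicity it only looks at the last token (a bigram model)."""
    table = {
        "the": [0.0, -2.0, 2.0, 0.0],
        "cat": [0.0, -2.0, -2.0, 2.0],
        "sat": [2.0, 0.0, -2.0, -2.0],
    }
    return table.get(state[-1], [0.0, 2.0, 0.0, 0.0])

def policy(state):
    """Policy pi(. | state): softmax over the LM's next-token logits."""
    logits = toy_lm_logits(state)
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def step(state, rng):
    """Sample an action (a token) from the categorical distribution and
    return the next state: the old state with that token appended."""
    token = rng.choices(VOCAB, weights=policy(state), k=1)[0]
    return state + (token,)

def rollout(prompt, max_new_tokens=10, seed=0):
    """Generate from the prompt until <eos> or the token budget runs out."""
    rng = random.Random(seed)
    state = tuple(prompt)
    for _ in range(max_new_tokens):
        state = step(state, rng)
        if state[-1] == "<eos>":
            break
    return state

print(rollout(["the"]))
```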

Baseline, Policy and Reward Models

We take our supervised fine-tuned (SFT) language model

- Baseline

- Policy

- Reward Model

However, the authors of the original InstructGPT paper note that for
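To make the three roles concrete, here is a simplified sketch. It assumes, for illustration only, that all three models start as copies of a single SFT checkpoint. `ToyLM`, `make_rlhf_models`, and the weight names `lm_head` and `reward_head` are hypothetical. A real setup would copy neural-network weights rather than Python lists.

```python
import copy
from dataclasses import dataclass, field

@dataclass
class ToyLM:
    """Hypothetical stand-in for a model checkpoint: named weight lists."""
    weights: dict = field(default_factory=dict)
    trainable: bool = True

def make_rlhf_models(sft):
    """Derive baseline, policy and reward model from one SFT checkpoint."""
    # Baseline: frozen copy, kept fixed as a reference point during RL.
    baseline = copy.deepcopy(sft)
    baseline.trainable = False

    # Policy: trainable copy whose parameters RL will update.
    policy = copy.deepcopy(sft)
    policy.trainable = True

    # Reward model: copy whose next-token head ("lm_head", an assumed name)
    # is replaced by a hypothetical head that outputs a single scalar score.
    reward_model = copy.deepcopy(sft)
    reward_model.weights.pop("lm_head", None)
    reward_model.weights["reward_head"] = [0.0]
    reward_model.trainable = True
    return baseline, policy, reward_model

sft = ToyLM(weights={"body": [0.1, 0.2], "lm_head": [0.3, 0.4]})
baseline, policy, reward_model = make_rlhf_models(sft)
```

Deep copies matter here: updating the policy must not silently change the baseline or the original SFT weights.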

Dataset of Human Preferences

For the next step, we focus exclusively on

- For each prompt of interest

- Ask human labelers to rank all the resulting pairs of outputs (notice that there are

This gives us the following human preferences dataset
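A ranking of K outputs for one prompt can be turned into pairwise comparisons mechanically. This sketch assumes labelers return a full ranking from best to worst, and `ranking_to_pairs` is a hypothetical helper. Every pair of distinct outputs yields one (prompt, winner, loser) triple, so K outputs give K(K-1)/2 comparisons.

```python
from itertools import combinations

def ranking_to_pairs(prompt, ranked_outputs):
    """Turn one ranking (best first) into (prompt, winner, loser) triples.
    combinations() yields index pairs i < j, so ranked_outputs[i] is the
    preferred output in each pair."""
    return [(prompt, ranked_outputs[i], ranked_outputs[j])
            for i, j in combinations(range(len(ranked_outputs)), 2)]

print(ranking_to_pairs("prompt", ["A", "B", "C"]))
# [('prompt', 'A', 'B'), ('prompt', 'A', 'C'), ('prompt', 'B', 'C')]
```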

Training the Reward Model

The reward model will take pairs

Notice that the preference distribution (and therefore the loss) depends only on the difference between the reward of the winning output and the reward of the losing output. It is therefore shift-invariant: adding the same constant to every reward leaves it unchanged. After the reward model has been trained, the authors compute the average reward
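The shift-invariance can be checked numerically. This sketch assumes the Bradley-Terry form of the preference distribution used for reward modeling in InstructGPT, P(winner preferred) = sigmoid(r_win - r_lose), with a loss equal to the mean of -log sigmoid(r_win - r_lose). The scores are made-up numbers standing in for reward-model outputs.

```python
import math

def neg_log_sigmoid(x):
    """Numerically stable -log(sigmoid(x))."""
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))

def preference_prob(r_win, r_lose):
    """Bradley-Terry style probability that the winner is preferred."""
    return math.exp(-neg_log_sigmoid(r_win - r_lose))

def reward_model_loss(reward_pairs):
    """Mean of -log sigmoid(r_win - r_lose) over (r_win, r_lose) score pairs."""
    return sum(neg_log_sigmoid(rw - rl) for rw, rl in reward_pairs) / len(reward_pairs)

scores = [(2.0, 0.5), (1.0, 1.5), (0.3, -0.7)]           # hypothetical scores
shifted = [(rw + 10.0, rl + 10.0) for rw, rl in scores]  # same constant added to every reward
print(reward_model_loss(scores), reward_model_loss(shifted))  # equal up to rounding
```

Because a constant added to every reward cancels in r_win - r_lose, the rewards can be re-centered after training (for example by subtracting an average reward) without changing the training loss.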

Reinforcement Learning

Now that we have a reward model available, we can use reinforcement learning to change the parameters of our policy
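InstructGPT combines the reward model with the frozen SFT model during this step by subtracting a KL penalty, which keeps the policy close to the SFT model. The sketch below assumes the baseline plays the role of that frozen reference. It only computes the penalized reward for a single sampled response and does not implement the PPO update itself. The default `beta=0.02` is a hypothetical placeholder value.

```python
def kl_penalized_reward(rm_score, policy_logprobs, baseline_logprobs, beta=0.02):
    """Reward for one sampled response, penalized for drifting from the baseline.

    rm_score: scalar reward-model score for (prompt, response).
    policy_logprobs / baseline_logprobs: per-token log-probabilities of the
    sampled response under the policy and under the frozen baseline.
    The summed log-ratio is log pi(y|x) - log pi_base(y|x), a single-sample
    estimate of KL(policy || baseline).
    """
    if len(policy_logprobs) != len(baseline_logprobs):
        raise ValueError("log-prob sequences must have the same length")
    log_ratio = sum(p - b for p, b in zip(policy_logprobs, baseline_logprobs))
    return rm_score - beta * log_ratio

# If the policy has not moved away from the baseline, no penalty is applied.
print(kl_penalized_reward(1.0, [-1.0, -2.0], [-1.0, -2.0]))  # 1.0
```

The larger `beta` is, the more strongly the policy is held near the baseline, trading reward-model score against staying close to the SFT behaviour.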